More data is being created, processed and stored today than we could ever have imagined. Software, algorithms, IoT devices and other machines have joined people in generating data – in different forms, using different methods and for different purposes. According to an IDC report, over 64 ZB of data was created in 2020.

Smart and timely use of data gives you insights that unlock incredible value and drive greater efficiencies for your business. However, these efficiencies require faster-than-ever, high-capacity and reliable memory, compute and storage resources to meet the demands of mission-critical workloads. And it’s not a question of one over the other: both memory and storage need to evolve, integrate and converge to overcome the complexity and challenges of today’s streaming, multidimensional datasets and real-time analytics.

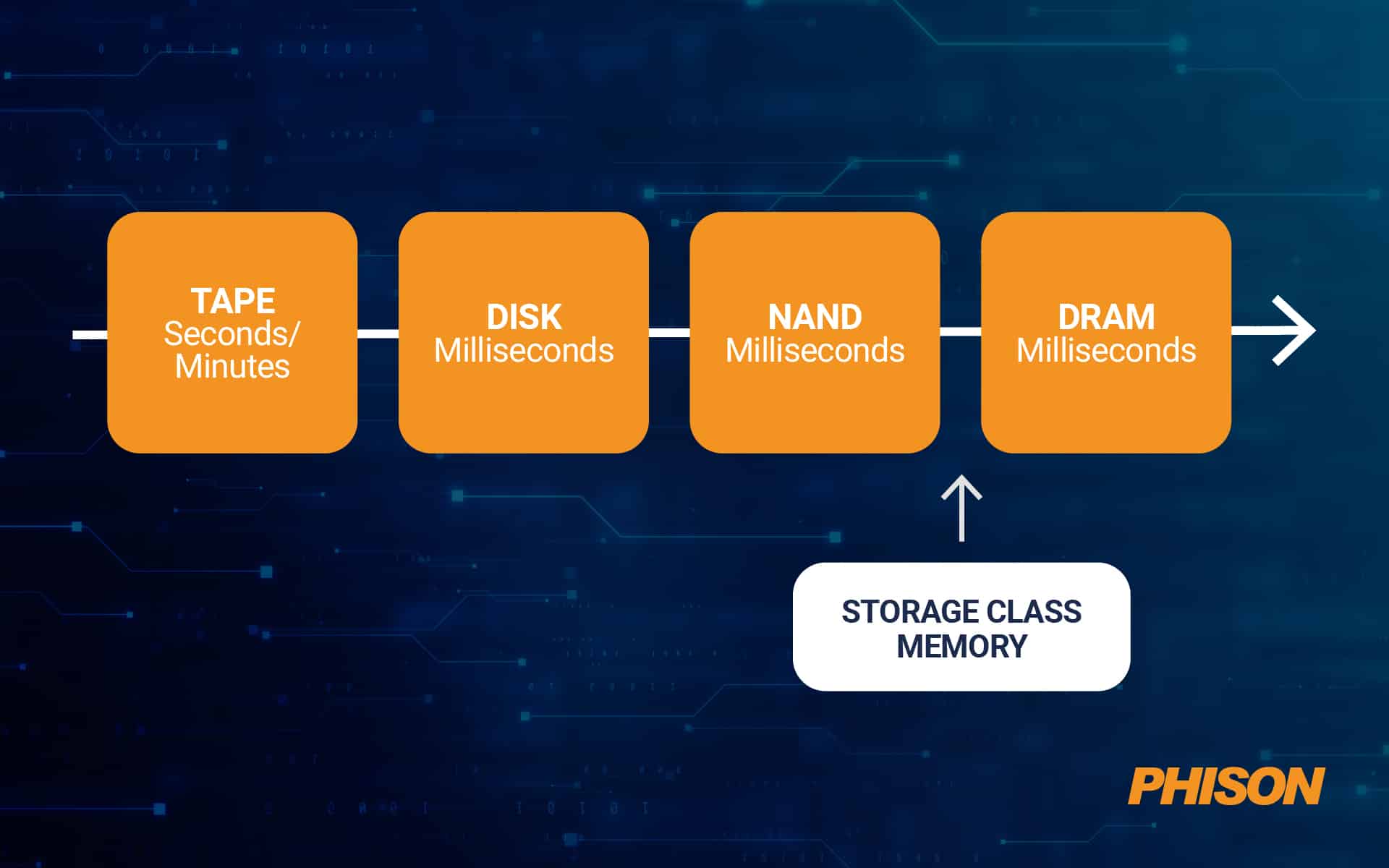

The memory/storage divide was born out of a trade-off between speed and persistence (even today, IDC estimates that only 2% of the 64 ZB was saved). However, the lines between memory and storage are increasingly blurred by breakthrough technologies that improve storage transfer speeds. For example, storage class memory (SCM) is a form of persistent memory that acts as a bridge between NAND flash and DRAM: like NAND, it retains data without power, but it is accessed in a similar way to DRAM. It does so at 10x the speed of NAND flash drives and at half the cost of DDR SDRAM.

Source: Pure Storage

This enables complex database, analytics, file storage and online transaction processing (OLTP) workloads across different use cases by reducing latency and enabling high-performance storage of unstructured datasets.

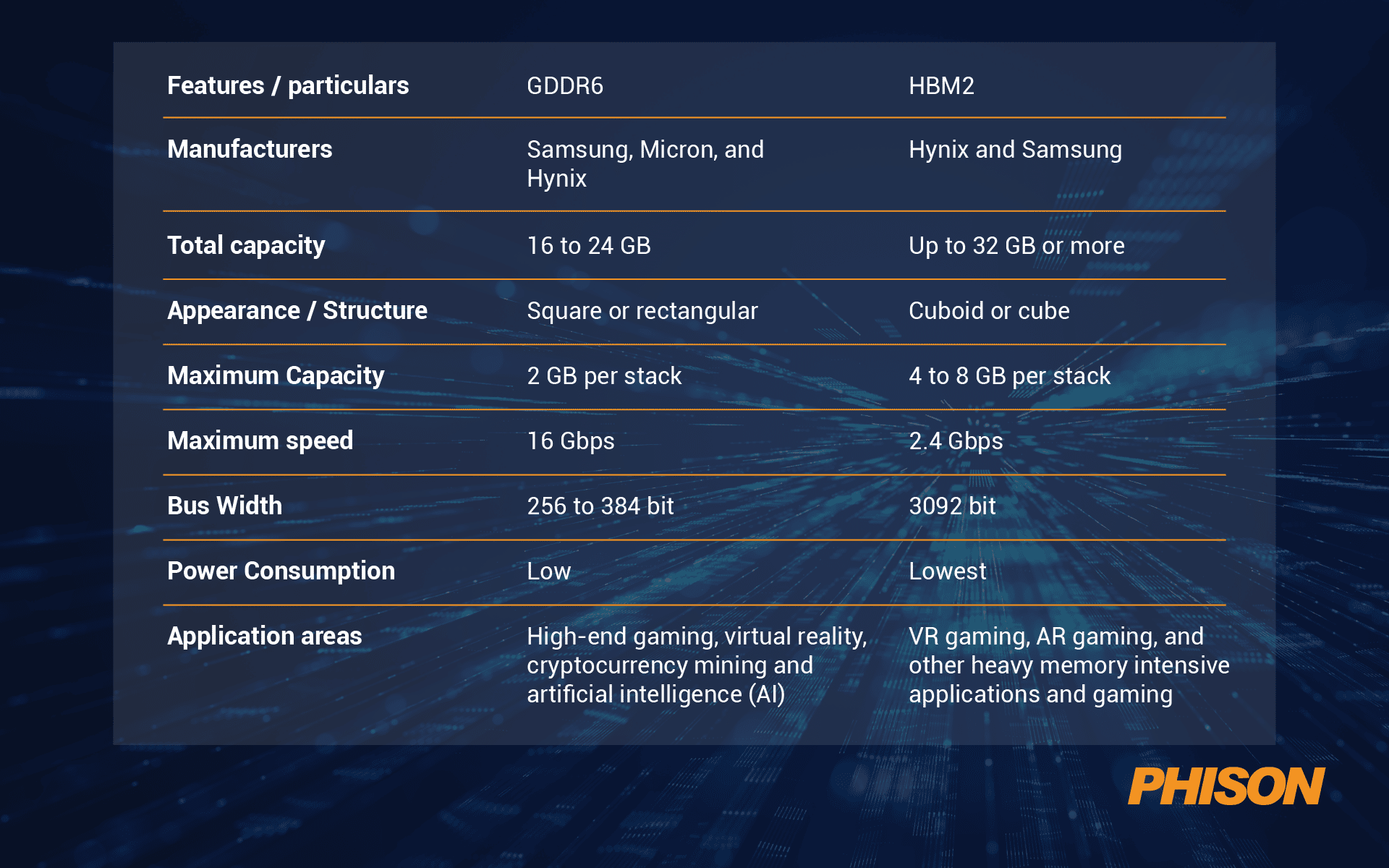

More specifically, high-performance memory comes in two flavors:

- Graphics Double Data Rate (GDDR) – a cost-optimized, high-speed standard with applications in AI and cryptocurrency mining

- High-Bandwidth Memory (HBM) – a high-capacity, power-efficient standard with applications in AR/VR, gaming and other memory-intensive workloads

Source: Face of It

On the storage front, fast SSDs are increasingly becoming the core of storage systems within high-performance computing (HPC) and hyperconverged infrastructure (HCI) architectures due to their low-latency and high-throughput capabilities.

SSDs built on Phison’s E18 NVMe controller, which uses the PCIe Gen 4.0 interface, reach sequential read and write speeds of up to 7.4 GB/s and 7.0 GB/s, respectively, speeds that were unheard of until recently. Phison is also slated to ship custom PCIe Gen 5.0 SSDs later this year for early adopters of the standard.
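
To put 7.4 GB/s in context, the short sketch below computes the theoretical one-direction bandwidth of an x4 PCIe link (x4 being the usual width for an M.2 NVMe SSD, which is an assumption here). It uses the per-lane transfer rates and 128b/130b encoding of PCIe Gen 3 through Gen 5 and ignores protocol overhead, so real drives land somewhat below these ceilings.

```python
# Theoretical one-direction PCIe link bandwidth, before protocol overhead.
# Gen 3, 4 and 5 all use 128b/130b line encoding; per-lane rates are in GT/s.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0, 5: 32.0}


def pcie_bandwidth_gb_s(gen: int, lanes: int = 4) -> float:
    """Return raw link bandwidth in decimal GB/s for a generation and lane count."""
    encoding_efficiency = 128 / 130
    bits_per_second = TRANSFER_RATE_GT_S[gen] * 1e9 * lanes * encoding_efficiency
    return bits_per_second / 8 / 1e9


for gen in (3, 4, 5):
    print(f"PCIe Gen {gen} x4: {pcie_bandwidth_gb_s(gen):.2f} GB/s")
```

By this estimate a Gen 4.0 x4 link tops out at roughly 7.88 GB/s, so 7.4 GB/s reads use about 94% of it, which is why the next jump in SSD speed depends on Gen 5.0.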

Let’s see how diverse industries – from life sciences to telecom, from manufacturing to fintech – are using the right mix of high-performance storage and memory to drive their business functions.

Artificial Intelligence (AI) and Machine Learning (ML)

AI-based algorithms have penetrated pretty much every industry. Deep learning and ML drive data gathering and processing in medical treatment, loan disbursement, gaming, recruitment and almost any business function you can think of. AI is a processor- and memory-intensive technology that requires GPUs (which are more efficient than CPUs at executing complex mathematical and statistical computations) in many large-scale implementations.

GPUs in turn demand high-performance memory for the “training” and “inference” operations of the ML algorithm.

You need large datasets to train the algorithm or neural network so that it can “learn” its task well. Frequently, these datasets are so large they need to be split across multiple servers or cloud systems, processed in parallel, or fed into the system over the course of days. This makes memory a potential bottleneck for “feeding” (or ingesting) the data to the algorithms, so you need power-efficient, high-bandwidth, high-capacity memory as well as large, fast storage.
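
Splitting a dataset across servers is commonly done by hashing each sample’s identifier to pick a server, so every node knows which subset it owns without a central lookup. The sketch below illustrates that idea only; the file names and server count are hypothetical, and real training frameworks provide their own sharding utilities.

```python
import hashlib


def assign_shard(sample_id: str, num_servers: int) -> int:
    """Map a sample to a server deterministically using a stable hash.

    Python's built-in hash() is randomized per process for strings,
    so a cryptographic digest is used to keep assignments stable.
    """
    digest = hashlib.sha256(sample_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_servers


def shard_dataset(sample_ids, num_servers):
    """Group sample IDs into one list per server."""
    shards = [[] for _ in range(num_servers)]
    for sid in sample_ids:
        shards[assign_shard(sid, num_servers)].append(sid)
    return shards


# Hypothetical file names standing in for a large training set.
samples = [f"sample_{i:05d}.bin" for i in range(10_000)]
shards = shard_dataset(samples, num_servers=4)
print([len(s) for s in shards])
```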

Before the ingest process begins, data needs to be collected from a variety of sources. Since this data can be unstructured or in various formats incompatible with each other, it must be transformed into a single acceptable format. The speed of transformation again depends on the quality (and quantity) of installed memory and storage. Unlike the ingest process, storage access during transformation is varied, needing both sequential and random reads and writes.
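
As a toy illustration of the transformation step, the sketch below maps records arriving in two formats (CSV and JSON Lines) onto a single schema. The field names, unit conversion and sample data are hypothetical; production pipelines typically do this with dedicated ETL or data-loading tools.

```python
import csv
import io
import json

# Hypothetical inputs: the same kind of sensor record arriving in two formats.
CSV_SOURCE = "id,temp_c\n1,21.5\n2,22.0\n"
JSONL_SOURCE = '{"sensor_id": 3, "temperature": 70.7, "unit": "F"}\n'


def from_csv(text):
    """Yield records from CSV text in the target schema."""
    for row in csv.DictReader(io.StringIO(text)):
        yield {"id": int(row["id"]), "temp_c": float(row["temp_c"])}


def from_jsonl(text):
    """Yield records from JSON Lines text in the target schema."""
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        rec = json.loads(line)
        temp = rec["temperature"]
        if rec.get("unit") == "F":
            temp = (temp - 32) * 5 / 9  # convert Fahrenheit to Celsius
        yield {"id": int(rec["sensor_id"]), "temp_c": round(temp, 2)}


# Target schema shared by both sources: {"id": int, "temp_c": float}
records = list(from_csv(CSV_SOURCE)) + list(from_jsonl(JSONL_SOURCE))
print(records)
```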

NVMe SSDs help solve this problem: they enable you to create and manage larger datasets by decoupling storage from the compute nodes and provisioning it dynamically. NVMe controllers also allow parallel access between the GPU and storage media, shortening the epoch time of the ML model (the time it takes to make one complete pass through the entire dataset) and maximizing GPU utilization.
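
A simple model shows why storage throughput affects epoch time and GPU utilization: if data loading overlaps with compute, each epoch takes as long as the slower of reading the dataset and processing it. The model, dataset size, compute time and drive speeds below are hypothetical, and the sketch ignores caching, preprocessing cost and network effects.

```python
def epoch_estimate(dataset_gb: float, read_gb_s: float, compute_s: float):
    """Rough per-epoch time, assuming data loading fully overlaps GPU compute."""
    io_s = dataset_gb / read_gb_s          # time to read the whole dataset once
    epoch_s = max(io_s, compute_s)         # the slower stage sets the pace
    gpu_utilization = compute_s / epoch_s  # fraction of the epoch the GPU is busy
    return epoch_s, gpu_utilization


# Hypothetical workload: 2 TB dataset, 400 s of GPU compute per epoch.
for label, read_gb_s in [("~0.5 GB/s (SATA-class SSD)", 0.5),
                         ("~7 GB/s (Gen4 NVMe SSD)", 7.0)]:
    epoch_s, util = epoch_estimate(2000, read_gb_s, 400)
    print(f"{label}: {epoch_s:.0f} s per epoch, GPU busy {util:.0%}")
```

In this toy case the slower drive leaves the GPU idle most of the time, while the faster one makes compute the limiting factor.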

After the AI model is trained, the inference phase starts – this is where the algorithm presents its output and aids decision-making. AI/ML inferencing needs high-bandwidth and low-latency memory to produce answers in real time. Here, speed and response time gain higher priority.

High-Resolution Streaming Video (Media & Entertainment)

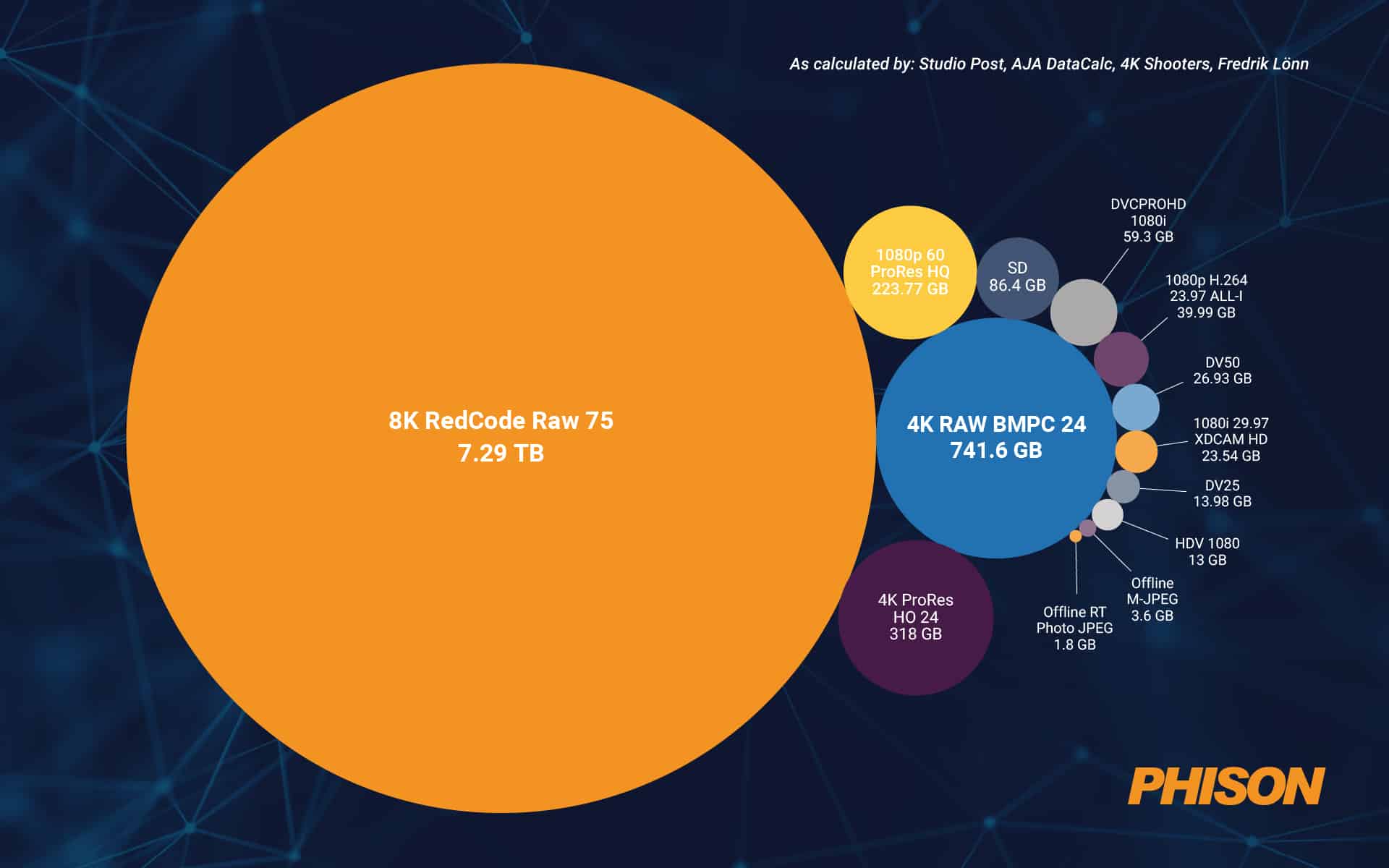

Video is the elephant in the room when it comes to file size and data volume growth. While better resolutions improve the viewer experience, they significantly increase the amount of data stored and transferred over networks. Streaming HD (1080p) videos over YouTube or Netflix “costs” 1.6 GB and 3 GB an hour of data respectively. When you up the resolution to 4K, consumption rises to 2.7 GB and 7 GB per hour respectively. The adoption and availability of 4K content is picking up speed as public broadcasters and OTT providers increase its use in programming and distribution.

Source: Signiant
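
The hourly figures above can be converted into average bitrates, which is how network links are usually sized. This sketch assumes decimal gigabytes (10^9 bytes) and a constant bitrate over the hour.

```python
def gb_per_hour_to_mbps(gb_per_hour: float) -> float:
    """Convert data consumed per hour (decimal GB) to an average bitrate in Mbit/s."""
    return gb_per_hour * 1e9 * 8 / 3600 / 1e6


rates = {
    ("YouTube", "1080p"): 1.6,
    ("Netflix", "1080p"): 3.0,
    ("YouTube", "4K"): 2.7,
    ("Netflix", "4K"): 7.0,
}
for (service, res), gb in rates.items():
    print(f"{service} {res}: {gb_per_hour_to_mbps(gb):.1f} Mbit/s")
```

For example, 7 GB per hour works out to roughly 15.6 Mbit/s sustained.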

While storage of video and media for infotainment is largely the concern of cloud file storage providers these days (thanks to the availability of fast and high-bandwidth connections), consumers and enterprises looking to move towards larger capacity SSDs for read-intensive workloads should be aware of the tradeoffs. Most of these SSDs are built with QLC NAND flash chips and tend to have higher latency, lower endurance and lower performance than their TLC counterparts.
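
The root of the tradeoff is cell density: each extra bit per cell doubles the number of voltage levels the controller has to distinguish, which narrows the margins between them, slows down writes and makes cells wear out sooner. The arithmetic is sketched below.

```python
# Bits stored per cell for common NAND types.
BITS_PER_CELL = {"SLC": 1, "MLC": 2, "TLC": 3, "QLC": 4}

for kind, bits in BITS_PER_CELL.items():
    states = 2 ** bits  # distinct charge levels the controller must tell apart
    print(f"{kind}: {bits} bit(s)/cell, {states} voltage states")

# Capacity gained per cell by moving from TLC to QLC.
density_gain = BITS_PER_CELL["QLC"] / BITS_PER_CELL["TLC"] - 1
print(f"QLC stores {density_gain:.0%} more bits per cell than TLC")
```

QLC thus buys about a third more capacity per cell at the cost of packing twice as many voltage states (16 vs 8) into the same cell.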

Gaming

Media and entertainment isn’t the only industry accelerating 4K/8K video adoption. High-end gaming calls for fast response times with no lag or buffering, in addition to high-res graphics. Gaming enthusiasts and pros often use multiple 4K/8K monitors, which need high-performance graphics cards and large frame buffers. HBM allows for a frame buffer of 16 to 32 GB on a memory interface up to 4,096 bits wide.
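
HBM’s advantage comes from bus width rather than clock speed: each stack exposes a 1,024-bit interface, so four stacks give 4,096 data lines. Peak bandwidth is the bus width multiplied by the per-pin data rate; the 2.0 Gbit/s per pin used below is an assumed HBM2-class figure for illustration, not a specification of any particular card.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak memory bandwidth in GB/s: bus width times per-pin data rate, in bytes."""
    return bus_width_bits * gbps_per_pin / 8


# Assumed example: four HBM2 stacks (1,024 bits each) at 2.0 Gbit/s per pin.
print(peak_bandwidth_gb_s(4 * 1024, 2.0))  # 1024.0
```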

While 4K graphics are the standard for gaming rigs today, 8K is clearly the future. This will need bigger and faster in-console and external SSDs to store and read/write video at high speeds.

It’s no secret that modern video games are among the most demanding consumers of DDR RAM. With the proliferation of cloud gaming, powered by data centers chock-full of GPUs that enable realistic rendering, memory requirements keep rising for both PC and console gaming.

Professional PC gaming often demands ultra-high-resolution 4K/8K graphics, where the GPU performs complex operations such as ray tracing and variable rate shading. These operations demand the lowest possible latency. Lag and buffering are crimes no serious gamer will forgive, making GDDR or HBM memory a non-negotiable requirement.

Then there is the question of storage. The average AAA game at 2K resolution takes up around 50 GB of storage. With 4K resolutions becoming the norm, mainstream games that use high-res unique texture mapping and 3D photorealistic rendering assets can easily take up 150 to 200 GB. This means that a standard collection of 20 or so games, along with mods, extra packs and other downloadable content (DLC), needs at least 2 TB of internal SSD space.
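
To see how a 20-game library reaches 2 TB, the sketch below totals a hypothetical mix of ten titles at about 50 GB and ten at about 150 GB, before mods and DLC. The split is an assumption; an all-4K library would need considerably more.

```python
# Hypothetical library mixing older titles and 4K-era titles (sizes in GB).
library = [50] * 10 + [150] * 10
extras_gb = 0  # mods, extra packs and DLC would add to this

total_gb = sum(library) + extras_gb
print(f"{len(library)} games need about {total_gb / 1000:.1f} TB")  # 20 games need about 2.0 TB
```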

Along with large capacity, gaming SSDs must also have read speeds in excess of 5.5 GB/s; otherwise, textures and objects near your character appear after a delay. Contemporary PCIe Gen4 NVMe M.2 drives can achieve read speeds of up to 7 GB/s for large, contiguous files and 3 GB/s for smaller, scattered files.
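
The gap between contiguous and scattered reads matters because games stream many small assets. Using the two speeds above, this sketch estimates how long a hypothetical 10 GB batch of assets takes to read; it ignores decompression and CPU or GPU processing time.

```python
def load_time_s(asset_gb: float, read_gb_s: float) -> float:
    """Seconds needed to read a given amount of data at a steady read speed."""
    return asset_gb / read_gb_s


# Hypothetical 10 GB of level assets read under each access pattern.
for pattern, speed in [("large, contiguous files", 7.0), ("small, scattered files", 3.0)]:
    print(f"{pattern}: {load_time_s(10, speed):.2f} s")
```

The same data takes more than twice as long to load when it is scattered, roughly 3.3 s instead of 1.4 s.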

Augmented Reality (AR) and Virtual Reality (VR)

AR and VR have penetrated various industries slowly but steadily: gaming, healthcare, engineering, architecture, construction, education and more. They are used more extensively in training and simulation than in other real-world applications. Virtual objects can be overlaid onto real-life objects to understand how they might behave once built or installed.

High-end AR and VR headsets used for business-critical applications need ultra-high-definition (UHD) displays with 3840×2160 resolution. These require powerful graphics cards with high-performance memory. A single uncompressed 4K image is about 33.2 MB at 32 bits per pixel. A 4K VR display running at 90 FPS therefore needs a data transfer rate of about 3 GB/s to move standard images, which translates to an SSD read speed of about 6 GB/s for a pair of images (one per eye). More detailed images require bandwidth of up to 10 GB/s, which is out of reach of the SSDs available on the market today. (SSDs based on Phison’s E26 controller, however, slated for release in late 2022, will reach and even exceed those read/write speeds.)
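
The figures above follow from simple arithmetic on uncompressed frames. The sketch reproduces them under the same assumptions as the text: 32 bits per pixel, 90 frames per second and one image per eye.

```python
def frame_bytes(width: int, height: int, bits_per_pixel: int = 32) -> int:
    """Size in bytes of one uncompressed frame."""
    return width * height * bits_per_pixel // 8


frame = frame_bytes(3840, 2160)     # one uncompressed 4K frame
per_eye_gb_s = frame * 90 / 1e9     # 90 frames per second
both_eyes_gb_s = 2 * per_eye_gb_s   # one image per eye

print(f"frame: {frame / 1e6:.1f} MB")           # frame: 33.2 MB
print(f"per eye: {per_eye_gb_s:.2f} GB/s")      # per eye: 2.99 GB/s
print(f"both eyes: {both_eyes_gb_s:.2f} GB/s")  # both eyes: 5.97 GB/s
```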

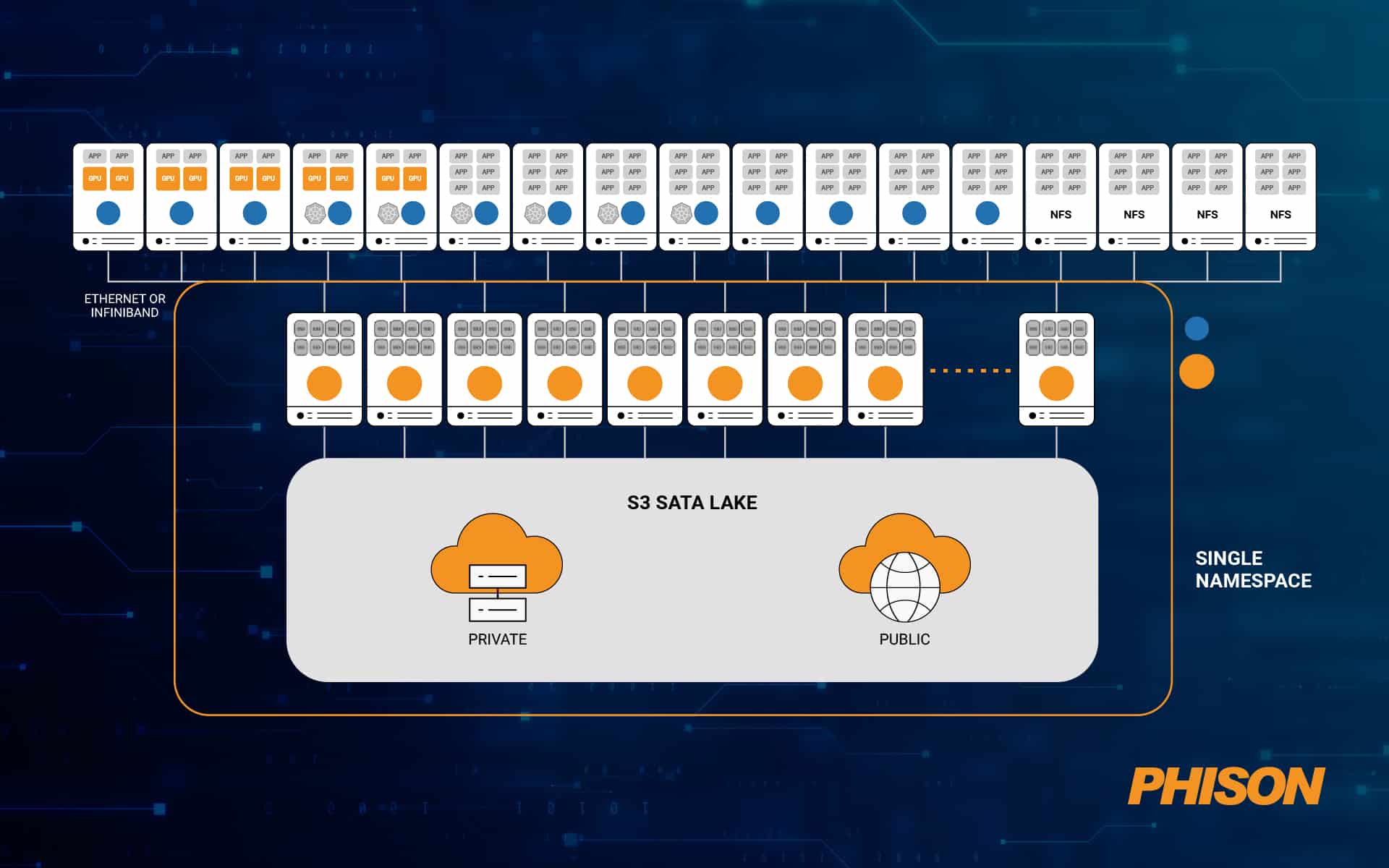

High Performance Computing (HPC)

Many fields today have specialized business functions with very high compute, storage and bandwidth needs. Many tasks in scientific research, finance, manufacturing, defense, energy and healthcare involve big data collection and processing that demand bleeding-edge performance.

Game development, video editing, VR simulation and CAD are just a few of the mid-range workloads that consume significant I/O resources and bandwidth. These need powerful computing and cloud systems that together constitute HPC. At the high end, HPC involves supercomputers that forecast weather, price derivatives and control satellites, which require many times more storage, memory and bandwidth than average workloads. HPC is now a core part of operational workflows, not just an IT cost center, and it calls for a reliable, flexible, scalable and efficient storage infrastructure.

A typical application running on HPC, such as a flight or molecular interaction simulation, needs huge amounts of data just to load and start. Combined with real-time user interactions, this requires low-latency, high-bandwidth, extreme-capacity storage infrastructure. In an HPC cluster, the amount of data read from and written to storage devices can be on the order of exabytes (EB). For this reason, storage-related data processing needs to be offloaded from the CPU to avoid interrupting computational operations.

Further, HPC applications have two kinds of data – “hot” data, which is accessed frequently (and therefore needs to be stored on SSDs close to the compute nodes) and “cold” data, which is accessed infrequently (and therefore can reside on storage arrays).
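
A minimal version of a hot/cold placement policy counts accesses per object over a time window and keeps frequently accessed objects on SSDs near the compute nodes. The threshold, tier names and access log below are hypothetical; real HPC storage systems use richer policies (recency, object size, explicit pinning).

```python
from collections import Counter

HOT_THRESHOLD = 3  # hypothetical: accesses per window that make data "hot"


def place_objects(access_log, threshold=HOT_THRESHOLD):
    """Assign each object seen in the window to the SSD tier (hot) or array tier (cold)."""
    counts = Counter(access_log)
    return {obj: ("nvme_ssd" if n >= threshold else "storage_array")
            for obj, n in counts.items()}


# Hypothetical access log for one time window.
log = ["mesh.h5", "mesh.h5", "mesh.h5", "checkpoint_01", "results_2019.tar"]
print(place_objects(log))
```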

Present-day HPC storage systems employ a parallel file system (PFS) so that every node in the cluster can communicate in parallel with every drive, with minimal wait times. Only the latest SSDs with NVMe controllers are able to service all application requests without lag.

Source: WEKA
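
Parallel file systems get much of their concurrency from striping: a file is cut into fixed-size stripe units placed round-robin across storage targets, so different nodes (or different parts of one large read) hit different drives at once. The sketch shows the offset arithmetic for a simple round-robin layout; the stripe size and count are hypothetical, and real systems such as Lustre add metadata, layout options and redundancy on top.

```python
def locate(offset: int, stripe_size: int, stripe_count: int):
    """Map a byte offset in a file to (target index, offset within that target)."""
    stripe_index = offset // stripe_size   # which stripe unit overall
    target = stripe_index % stripe_count   # round-robin across targets
    row = stripe_index // stripe_count     # full rounds completed before this unit
    return target, row * stripe_size + offset % stripe_size


MiB = 1024 * 1024
# Hypothetical layout: 1 MiB stripes spread over 4 storage targets.
for off in (0, 1 * MiB, 3 * MiB + 10, 4 * MiB):
    print(off, locate(off, MiB, 4))
```

Consecutive megabytes of the file land on different targets, which is what lets many clients read one file in parallel.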

Automotive

The future of the automotive industry is CASE: Connected, Autonomous, Shared, Electric. Each of these characteristics places high demands on modern cars for data collection, processing and storage. Conventional cars today already run on data as much as they do on fuel; every car generates in excess of 1 TB of data per day, and typical onboard storage needs by application are:

- Telematics and V2X applications – 8 GB to 64 GB

- Infotainment – 64 GB to 256 GB

- HD mapping – 16 GB to 128 GB

- Dashboard camera – 8 GB to 64 GB

- Digital instrument cluster – 8 GB to 64 GB

- Human Machine Interface (HMI) – 32 GB to 64 GB

- Augmented Reality (AR) – 16 GB to 128 GB

- Advanced Driver Assistance System (ADAS) – 8 GB to 128 GB

- Accident recording – 8 GB to 256 GB
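
Adding up these ranges gives a rough sense of how much storage one vehicle might carry if it ran all of these applications, assuming each uses its own dedicated capacity (in practice, some may share devices).

```python
# Onboard storage ranges from the list above, in GB (min, max).
ranges = {
    "Telematics and V2X": (8, 64),
    "Infotainment": (64, 256),
    "HD mapping": (16, 128),
    "Dashboard camera": (8, 64),
    "Digital instrument cluster": (8, 64),
    "HMI": (32, 64),
    "AR": (16, 128),
    "ADAS": (8, 128),
    "Accident recording": (8, 256),
}
low = sum(lo for lo, _ in ranges.values())
high = sum(hi for _, hi in ranges.values())
print(f"Total onboard storage: {low} GB to {high} GB")  # Total onboard storage: 168 GB to 1152 GB
```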

Less than a decade from now, we’ll have 90 million connected autonomous vehicles (AVs) that will collectively generate 1 ZB of data a day. How are we going to make sense of – let alone manage – these enormous amounts of data? The answer is NAND flash-based automotive storage solutions such as eMMC, SSD and UFS that work in sync with a centralized cloud and AI-based applications.
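
Dividing the projected fleet total by the number of vehicles shows what it implies per car, assuming decimal units and an even split across the fleet.

```python
ZB = 10**21  # bytes in a zettabyte (decimal)
TB = 10**12  # bytes in a terabyte (decimal)

vehicles = 90_000_000
fleet_bytes_per_day = 1 * ZB

per_vehicle_tb = fleet_bytes_per_day / vehicles / TB
print(f"{per_vehicle_tb:.1f} TB per vehicle per day")  # 11.1 TB per vehicle per day
```

That is roughly 11 TB per autonomous vehicle per day, about an order of magnitude more than the 1 TB cited for conventional cars.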

Accelerating innovation with Phison

Phison is a market leader in NAND flash controller and storage solutions – the basis for each and every one of the real-world applications we’ve discussed today. It offers client, enterprise and embedded solutions that stretch the limits of speed, capacity, reliability and efficiency in memory and storage.

Phison’s best-in-class SSD products are manufactured and optimized to custom specifications for performance, power and endurance, making them suited to the most complex, data-intensive and mission-critical applications in any industry. They deliver consistent performance and faster response times, directly improving the success rate of business workloads.