Data is one of the most important assets an enterprise has today. Organizations are creating and collecting more information than ever before, which can present challenges in storing, managing, accessing and analyzing it.

Fortunately, as enterprises come to see the potential benefits of expanding volumes of data, technology is evolving as well, producing a wealth of new tools for managing that data.

In this article, we’ll take a look at several innovations that are transforming—and improving—the way enterprise data is handled.

Zoned namespace SSDs

NAND flash-based solid state drives (SSDs) are a critical component in many enterprise storage ecosystems. Of course, NAND flash and SSD technology have advanced over the years, and the original architecture of some SSDs isn’t able to deliver the improved performance that today’s technology has enabled.

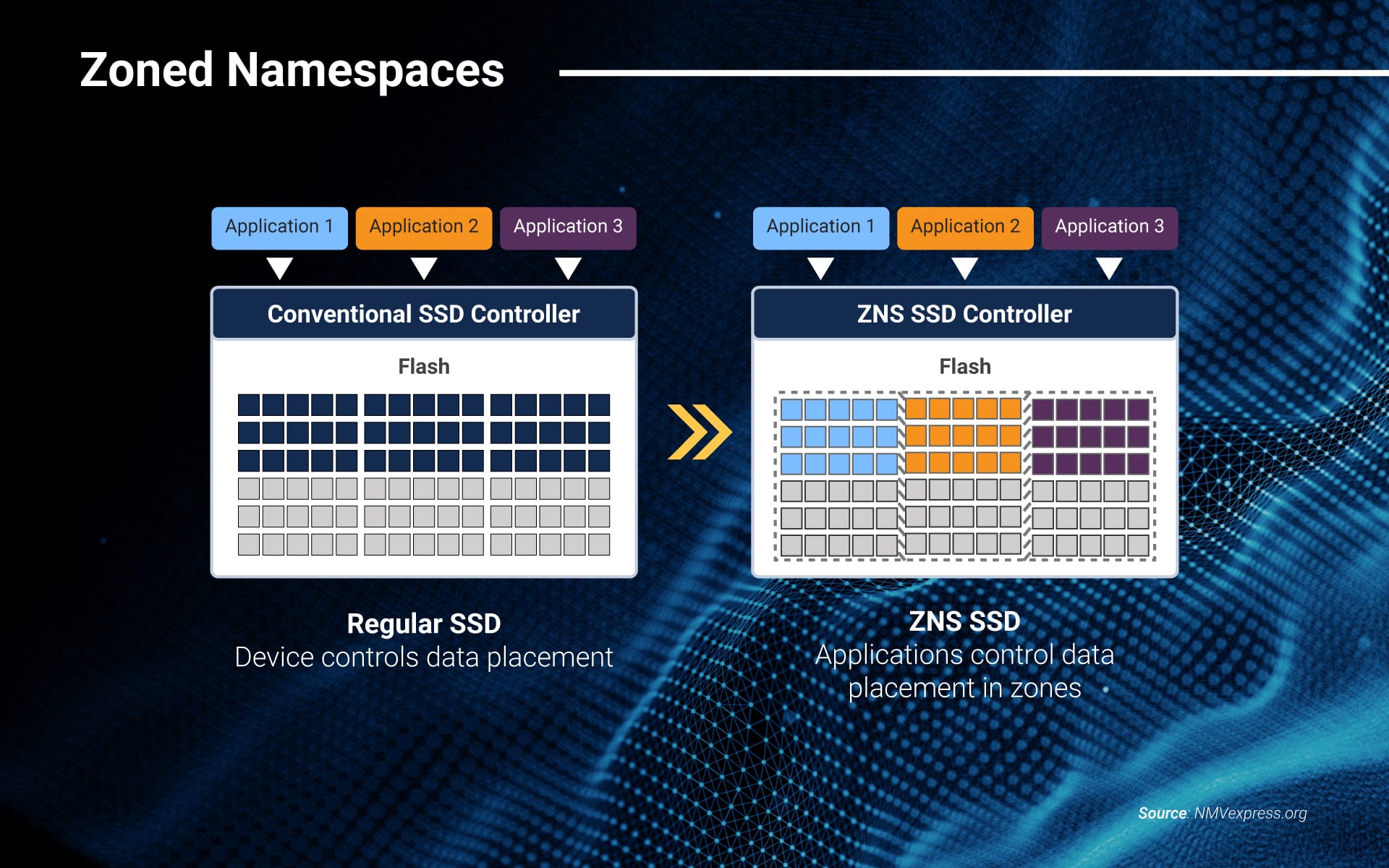

For instance, the flash blocks in NAND flash-based SSDs are limited to a finite number of writes. Data must also be written sequentially within a flash block, and existing data cannot be overwritten in place: the entire block must be erased before new data can be written to it. These constraints are managed by the Flash Translation Layer (FTL), located in the SSD controller, which relies on garbage collection, over-provisioning, DRAM and indirection to align the SSD storage blocks with the host. And while these actions allow the SSD to store data in any of the flash blocks, they also come with negative effects: higher write amplification, increased over-provisioning, decreased throughput and higher latency.
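Write amplification is usually expressed as a ratio: the bytes physically written to NAND divided by the bytes the host asked to write. The short sketch below shows that arithmetic. The function name and the byte counts are hypothetical values chosen for illustration, not measurements from any real drive.

```python
def write_amplification_factor(host_bytes: int, nand_bytes: int) -> float:
    """Return NAND bytes written divided by host bytes written."""
    if host_bytes <= 0:
        raise ValueError("host_bytes must be positive")
    return nand_bytes / host_bytes


# Hypothetical counters: the host wrote 100 GiB, but garbage collection
# inside the drive caused 250 GiB of physical NAND writes.
GIB = 2**30
waf = write_amplification_factor(100 * GIB, 250 * GIB)
print(f"write amplification factor: {waf:.2f}")
```

A factor of 1.0 would mean the drive wrote exactly what the host requested; anything higher consumes extra write cycles from the limited budget of each flash block.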

That’s all set to change, thanks to zoned namespace (ZNS). Developed by the NVMe Technical Work Group and released in 2020, ZNS is an industry standard that more effectively aligns the inner workings of an SSD with the host.

Source: NVMexpress.org

Instead of the SSD controller deciding where data is placed in the SSD’s memory blocks, the host and its applications take over that control. The data is written into zones that align more efficiently with the media, which helps cut out the indirection overhead, over-provisioning and other operations the SSD controller previously relied on to write data efficiently.
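To make the zone idea concrete, here is a minimal toy model of a single zone. It assumes the core rule of the zoned model: a zone is written front to back and reset as a whole. The class and method names (`Zone`, `write`, `reset`) are hypothetical and are not an API from the NVMe specification or any driver.

```python
class ZoneError(Exception):
    """Raised when a write breaks the sequential-write rule of a zone."""


class Zone:
    """Toy model of one zone: written front to back, reset as a whole."""

    def __init__(self, start_lba: int, capacity: int) -> None:
        self.start_lba = start_lba      # first logical block address in the zone
        self.capacity = capacity        # number of blocks the zone can hold
        self.write_pointer = start_lba  # next LBA that may be written
        self.blocks = []                # data written so far, in order

    def write(self, lba: int, data: bytes) -> None:
        if self.write_pointer >= self.start_lba + self.capacity:
            raise ZoneError("zone is full; reset it before writing again")
        if lba != self.write_pointer:
            raise ZoneError(
                f"write at LBA {lba} rejected; next writable LBA is {self.write_pointer}"
            )
        self.blocks.append(data)
        self.write_pointer += 1

    def reset(self) -> None:
        """Discard everything in the zone and rewind the write pointer."""
        self.blocks.clear()
        self.write_pointer = self.start_lba


zone = Zone(start_lba=0, capacity=4)
zone.write(0, b"first")
zone.write(1, b"second")
try:
    zone.write(0, b"overwrite")  # in-place overwrite is not allowed
except ZoneError as err:
    print("rejected:", err)
zone.reset()  # the whole zone becomes writable again
```

Because the host only ever appends at the write pointer, the drive in this model has no need to remap individual blocks behind the scenes, which is the overhead the zoned approach is meant to remove.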

By eliminating all the little tricks used by conventional SSD controllers, ZNS SSDs improve throughput, latency and SSD lifetime. They can also increase available capacity in the drive—by up to 20% according to some experts.

Compute Express Link (CXL)

Enterprises are demanding ultra-fast data processing speeds and advanced server performance. Along with these demands is a growing need for a solution that allows organizations to load-store across a number of different device types and memory classes.

Consider this: many of today’s servers, especially those of hyperscalers, contain components that come with their own on-board memory. Besides the main memory attached to a host processor, there also might be smart NICs, GPUs and storage SSDs. These devices have memory caches that often are underutilized, some by a lot. That means that throughout that system, there is a lot of memory lying idle. Multiply all that unused memory by thousands of servers and it becomes significant. These caches typically can’t interact unless they have a tool to connect them.
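A quick back-of-the-envelope calculation shows how the idle memory adds up. Every figure below (device capacities, utilization rates and the server count) is a made-up assumption for illustration only, not data from any real deployment.

```python
def idle_memory_gib(devices: dict, servers: int) -> float:
    """Sum the unused memory per server and scale it across a fleet.

    `devices` maps a device name to (capacity in GiB, fraction in use).
    """
    per_server = sum(capacity * (1 - used) for capacity, used in devices.values())
    return per_server * servers


# Hypothetical server: each entry is (capacity in GiB, fraction in use).
hypothetical_devices = {
    "gpu": (16, 0.5),
    "smart_nic": (8, 0.25),
    "ssd_dram": (4, 0.5),
}

total = idle_memory_gib(hypothetical_devices, servers=1000)
print(f"idle memory across the fleet: {total:.0f} GiB")
```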

That’s where Compute Express Link (CXL) comes in. CXL is a standardized cache-coherent interconnect that allows organizations to connect all those memory caches together so they can be used more efficiently. The interconnect leverages the PCIe Gen5 interface, and organizations can choose to use either the CXL or the PCIe protocol over it.

Using CXL results in improved utilization, which means organizations can get more out of the memory they already have. Cache coherency and the sharing of memory resources can help improve system performance and reduce infrastructure complexity.

NVMe over Fabrics (NVMe-oF)

Transport protocols for storage area networks (SANs) have evolved significantly over time. Different protocols were developed to connect to HDD-based SANs, such as iSCSI, Serial Attached SCSI (SAS) and Fibre Channel Protocol (FCP). These protocols delivered enough performance for HDDs but aren’t sufficient for SSDs. Once the HDD bottleneck was removed by replacing HDDs with SSDs, the protocols themselves became the bottleneck. NAND flash-based storage needed something more to help organizations reap all the benefits of the technology.

NVMe over Fabrics (NVMe-oF) extends NVMe onto fabrics such as InfiniBand, Fibre Channel and Ethernet. It encapsulates NVMe commands and transports the capsules over a storage network fabric. With this technology, organizations can access data both locally and over SANs more efficiently than they did over traditional protocols.
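The encapsulation step can be pictured with a small sketch: a fixed-size command is packed into bytes, optional data is attached, and the result travels as one capsule. The 64-byte layout below is a simplified assumption loosely modeled on an NVMe command and is not the wire format defined by the NVMe-oF specification. All function and field names are hypothetical.

```python
import struct

# Simplified, assumed layout: opcode, flags, command id, namespace id,
# 32 pad bytes, starting LBA, block count, 12 pad bytes (64 bytes total).
COMMAND_FORMAT = "<BBHI32xQI12x"
COMMAND_SIZE = struct.calcsize(COMMAND_FORMAT)

ILLUSTRATIVE_WRITE_OPCODE = 0x01  # used here purely as an example value


def build_command(opcode, command_id, nsid, start_lba, num_blocks) -> bytes:
    """Pack the command fields into a fixed-size byte string."""
    return struct.pack(COMMAND_FORMAT, opcode, 0, command_id, nsid, start_lba, num_blocks)


def build_capsule(command: bytes, data: bytes = b"") -> bytes:
    """A toy capsule: the command followed by any in-capsule data."""
    if len(command) != COMMAND_SIZE:
        raise ValueError(f"command must be {COMMAND_SIZE} bytes")
    return command + data


def parse_capsule(capsule: bytes):
    """Split a toy capsule back into its command fields and data."""
    opcode, _flags, command_id, nsid, start_lba, num_blocks = struct.unpack(
        COMMAND_FORMAT, capsule[:COMMAND_SIZE]
    )
    fields = {
        "opcode": opcode,
        "command_id": command_id,
        "nsid": nsid,
        "start_lba": start_lba,
        "num_blocks": num_blocks,
    }
    return fields, capsule[COMMAND_SIZE:]


command = build_command(ILLUSTRATIVE_WRITE_OPCODE, command_id=7, nsid=1,
                        start_lba=2048, num_blocks=8)
capsule = build_capsule(command, data=b"payload")
fields, data = parse_capsule(capsule)
print(fields, data)
```

In this sketch the receiving side recovers exactly the command the sender built, regardless of which fabric carried the bytes. That separation of command from transport is the general idea the capsule model expresses.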

By delivering high-speed lossless data transfer over networks like Ethernet and Fibre Channel, NVMe-oF provides an even more effective connection between servers and storage and can also utilize CPU resources more efficiently. Using NVMe-oF, organizations can enjoy low-latency, high-performance storage that’s disaggregated from servers and managed as a single shared pool. That means IT can provision storage resources more granularly and flexibly than ever before.

Single Root I/O Virtualization (SR-IOV)

Virtualization has become a very important part of enterprise computing. In addition to improving server utilization, it can also help organizations reduce the need for physical hardware in the data center.

The Single Root I/O Virtualization (SR-IOV) specification aids virtualization by enabling a device such as a storage SSD to separate and isolate PCIe resources across a variety of virtual servers. Prior to this development, an SSD that shared resources with multiple virtual machines (VMs) would see a decline in performance as the VM manager’s processes to enable that sharing created an I/O bottleneck.

SR-IOV makes it possible to eliminate the need for the VM manager to manage resource sharing. Instead, a PCIe device such as an SSD controller can take over that function. Because the manager component no longer needs to process that operation, performance is optimized and can approach even the level of performance delivered by a bare-metal server.
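On Linux hosts, a PCIe device that supports SR-IOV exposes `sriov_totalvfs` and `sriov_numvfs` files in its sysfs directory, so a quick check of how many virtual functions a device offers can look like the sketch below. The helper name and the PCI address are hypothetical, and the sketch assumes a Linux system. On other platforms, or for devices without SR-IOV, it simply returns `None`.

```python
from pathlib import Path


def sriov_status(pci_address: str, sysfs_root: str = "/sys/bus/pci/devices"):
    """Return total and enabled virtual function counts, or None if unavailable."""
    device_dir = Path(sysfs_root) / pci_address
    total_file = device_dir / "sriov_totalvfs"
    enabled_file = device_dir / "sriov_numvfs"
    if not (total_file.exists() and enabled_file.exists()):
        return None  # device missing or it does not advertise SR-IOV
    return {
        "total_vfs": int(total_file.read_text().strip()),
        "enabled_vfs": int(enabled_file.read_text().strip()),
    }


# "0000:3b:00.0" is a placeholder address; substitute a real one from lspci.
print(sriov_status("0000:3b:00.0"))
```

Each enabled virtual function appears to a VM as its own PCIe device, which is how the sharing work moves off the VM manager and onto the device itself.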

How Phison can help

As the first company shipping PCIe Gen4x4 NVMe SSD solutions, Phison is the industry leader in enabling high-performance computing for bandwidth-hungry applications. In developing these solutions, Phison is helping to create the features that will meet tomorrow’s demand for ever-faster and better digital experiences:

- As data transfer speeds increase, Phison can provide customers with PCIe Gen5 SSD platforms customized to perform optimally in their applications.

- Phison is currently in development on a ZNS SSD and is committed to achieving the high performance, quality of service and efficiency that customers need.

- Because SR-IOV allows multiple hosts to access a single SSD device, traffic from one host can interfere with traffic from another. Phison’s proprietary Multi-Function Quality of Service (MFQoS) can control that traffic and keep each host’s transfer speed consistent, for faster response times and excellent QoS.

- Phison continually invests heavily in R&D to create SSD solutions that align with emerging technologies and ensure that its products are future-proofed and ready to power tomorrow’s innovations.
