The terms artificial intelligence (AI) and machine learning (ML) are being used more and more in the computing industry, but even experienced IT practitioners may not be fully aware of the computing and storage infrastructure required to support the two technologies. This article examines the issue and offers insights into the ways that solid state drives (SSDs) enable the best AI and ML outcomes.

What are AI and ML?

The first step in understanding the true natures of AI and ML is to grasp that they are not the same thing. AI is about creating software that can think like a human being. ML involves getting software to learn new concepts and then to continue getting better at mastering these concepts. They are distinct but related, overlapping technologies.

AI and ML are not new ideas, either. The computer visionary Alan Turing posited that machines could be made to think like people back in 1950. By 1959, AI pioneer Marvin Minsky was administering the MIT freshman calculus exam to a very early AI program. It passed. Movies have given us the murderously intelligent HAL 9000 in 2001: A Space Odyssey and the equally lethal Skynet in The Terminator. These examples are worth mentioning because fiction has informed the way we think about AI and ML, while also causing some confusion along the way.

Fortunately, we have not yet reached the age of Skynet, but our world is full of impressive examples of AI and ML at work. Most of these are not big or flashy, but they are no less impactful on business and our daily lives. A Robotic Process Automation (RPA) “bot,” for example, can use AI to perform tasks like reading email messages and filling out forms. ML drives processes like facial recognition in law enforcement or cancer diagnosis in the medical field.

How do AI and ML work?

While there are many varieties of AI and ML programming, at their core, both technologies are based on pattern recognition. In the RPA email reading example, the bot is trained to recognize phrases in an email message that describe what it’s about. A message containing the words “payment” or “overdue,” for instance, is probably meant for the accounting department.

The bot can also parse the email signature and use pattern recognition to determine if the message comes from a vendor (accounts payable) or a customer (accounts receivable). This type of capability is also useful in cybersecurity, wherein AI software can examine millions of data points coming from security logs and spot anomalous behavior that indicates an attack is underway.
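The routing decision described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular RPA product works: hard-coded rules stand in for the patterns a trained bot would learn, and the function name `route_email`, the `KNOWN_SENDERS` table and the `.example` domains are all hypothetical.

```python
import re

# The two keywords named in the text; a trained bot would learn many more.
ACCOUNTING_KEYWORDS = {"payment", "overdue"}

# Hypothetical lookup of sender domains, for illustration only.
KNOWN_SENDERS = {"supplier.example": "vendor", "client.example": "customer"}


def route_email(body: str, sender_address: str) -> str:
    """Pick a destination queue from the message text and the sender."""
    words = set(re.findall(r"[a-z]+", body.lower()))
    if not words & ACCOUNTING_KEYWORDS:
        return "general inbox"

    # The message concerns accounting; use the sender to pick the sub-team.
    domain = sender_address.rsplit("@", 1)[-1].lower()
    sender_type = KNOWN_SENDERS.get(domain)
    if sender_type == "vendor":
        return "accounts payable"
    if sender_type == "customer":
        return "accounts receivable"
    return "accounting"


print(route_email("Your payment is overdue.", "billing@supplier.example"))
```

In a real deployment the keyword set and the sender classification would come from a trained model rather than fixed tables, but the shape of the decision is the same.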

ML similarly utilizes pattern recognition to get better at understanding a given area of knowledge. ML systems can learn about data and continuously get “smarter” without having to follow programmed code or specific rules. For example, an ML algorithm can “look at” a million images of trees and plants. At some point, the algorithm will teach itself the difference between a tree and a plant. The essential difference between AI and ML, therefore, is that AI has been taught to spot patterns while ML is still learning and getting better at spotting patterns.
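As a toy illustration of learning from examples rather than from hand-written rules, the sketch below uses a nearest-centroid classifier, a deliberately simple supervised technique chosen here for brevity. It is not the image-recognition approach the paragraph alludes to, and the height measurements are made up for illustration.

```python
from statistics import mean


def train(examples):
    """Learn one average height per label from (height_m, label) pairs."""
    by_label = {}
    for height, label in examples:
        by_label.setdefault(label, []).append(height)
    return {label: mean(heights) for label, heights in by_label.items()}


def predict(model, height):
    """Return the label whose learned average is closest to the input."""
    return min(model, key=lambda label: abs(model[label] - height))


# Made-up toy measurements in meters, for illustration only.
examples = [(12.0, "tree"), (8.5, "tree"), (0.4, "plant"), (1.1, "plant")]
model = train(examples)

print(predict(model, 9.0))
print(predict(model, 0.6))
```

No rule such as "anything taller than five meters is a tree" appears in the code. The boundary emerges from the examples, and it shifts as more examples are added.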

All of this requires the handling of enormous amounts of data. To a certain extent, AI and ML are simply extensions of the big data paradigm. Big data and data analytics make it possible to interpret large, diverse data sets, discover visual trends and come up with new insights. AI and ML take the process a step further. They leverage existing big data analytics and data science processes, such as data mining, statistical analysis and predictive modeling, to enable inferences, decision making and action steps based on big data.

Practically speaking, AI and ML comprise four separate processes, each of which involves data management:

- Data ingest—bringing data from multiple sources into big data platforms like Spark, Hadoop and NoSQL databases, the foundation of AI and ML workloads

- Preparation—making the data ready for use in AI and ML training

- Training—running the training algorithms of AI and ML software programs

- Inference—getting AI and ML software to perform its inferential workflows
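The four stages can be sketched as a chain of functions. This is a minimal, in-memory illustration under simplifying assumptions: the "model" is just an average, the sources are small Python lists rather than Spark or Hadoop, and all function names are hypothetical.

```python
from statistics import mean


def ingest(sources):
    """Ingest: pull raw records from several sources into one collection."""
    return [record for source in sources for record in source]


def prepare(raw_records):
    """Preparation: drop unusable records and convert the rest to floats."""
    prepared = []
    for record in raw_records:
        try:
            prepared.append(float(record))
        except (TypeError, ValueError):
            continue  # skip records that cannot be parsed
    return prepared


def train(values):
    """Training: fit a trivial 'model', here just the mean of the data."""
    return {"threshold": mean(values)}


def infer(model, value):
    """Inference: apply the trained model to a new input."""
    return "high" if value > model["threshold"] else "low"


# Two toy sources with mixed, partly invalid records.
sources = [["1.0", "2.0", "bad"], [3.0, None, "6.0"]]
model = train(prepare(ingest(sources)))
print(infer(model, 5.0))
```

Every stage reads or writes data, which is why storage behavior matters at each step and not only during training.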

Why NAND flash storage is essential for AI and ML

The central role of big data in AI and ML makes storage a critical success factor for these workloads. Without effective, flexible and high-performing storage, AI and ML software won’t perform well or, at a minimum, will make poor use of compute and storage infrastructure.

For these reasons, NAND flash storage is the ideal medium for storage that supports AI and ML. To understand why, consider the storage requirements at each of the four stages of AI and ML.

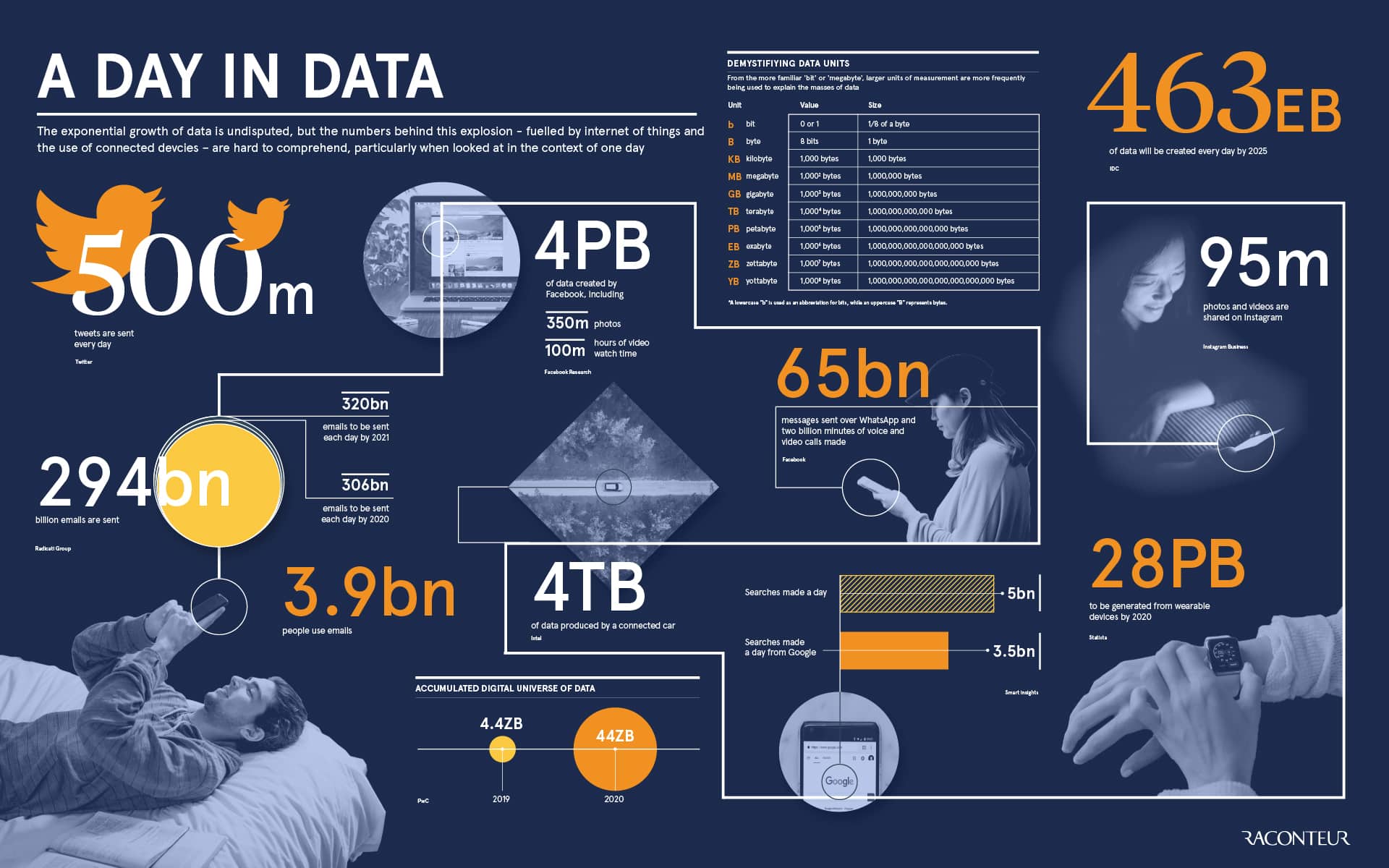

At data ingest, AI and ML systems take in large-scale, highly varied data sets, including structured and unstructured data formats. Data can come from a potentially wide range of sources. Successful ingest requires high-capacity storage, measured perhaps in petabytes or even exabytes, that also includes a fast tier for real-time analytics. Reliability is critical here, as it is with the other three stages. NAND flash provides the best mix of reliability and processing speed.

The data preparation stage of AI and ML means transforming the raw, ingested data and formatting it for consumption by AI and ML software’s neural networks in the training and inference stages. File input/output (I/O) speed is important at the data preparation stage. NAND flash performs well in this use case.

The training and inferencing stages of AI and ML tend to be compute intensive. They require high-speed streaming of data into training models in the software. It’s an iterative process with a lot of stops and starts, all of which can strain storage resources if they are not suited to the task.

How SSDs enable AI and ML success

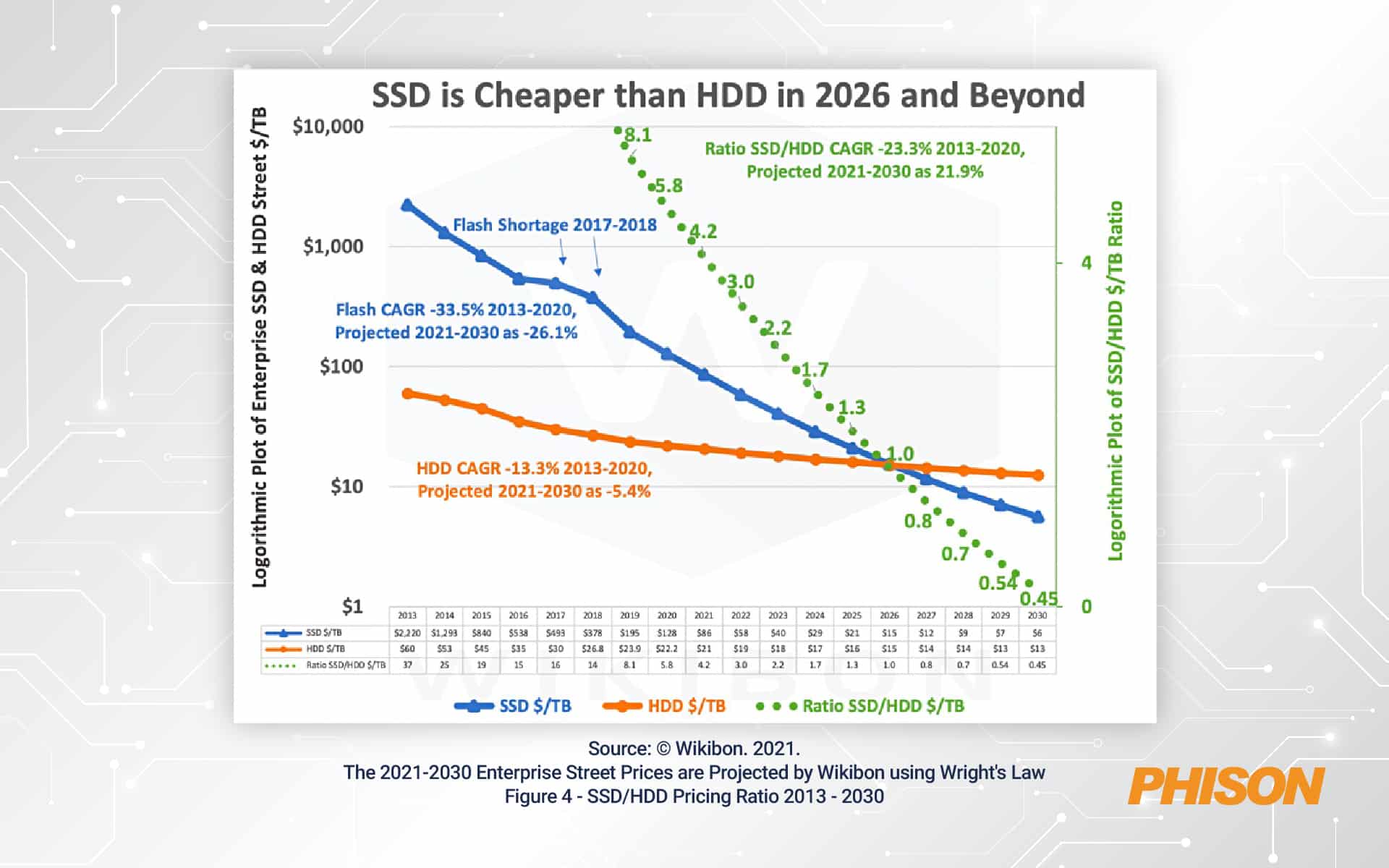

The scale of data storage required for AI and ML projects generally argues for a mix of storage solutions. A tiered approach is often best, with some lower-performing, lower-cost storage holding less relevant data. However, there has to be a high-performing tier as well, one that is likely to be larger, proportionally, than is usually found in a big data ecosystem.
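A tiering policy of this kind can be sketched as a simple placement rule. The example below is an illustration only: the threshold of 10 reads per day, the tier names and the dataset names are hypothetical, and real policies typically weigh more signals than read frequency.

```python
def assign_tier(reads_per_day: float, hot_threshold: float = 10.0) -> str:
    """Place frequently read data on the fast tier, the rest on capacity."""
    return "ssd" if reads_per_day >= hot_threshold else "capacity"


def plan_placement(datasets, hot_threshold: float = 10.0):
    """Group dataset names by the tier they should live on."""
    placement = {"ssd": [], "capacity": []}
    for name, reads_per_day in datasets.items():
        placement[assign_tier(reads_per_day, hot_threshold)].append(name)
    return placement


# Hypothetical datasets with their average reads per day.
datasets = {"training_images": 250.0, "archived_logs": 0.2, "feature_store": 40.0}
print(plan_placement(datasets))
```

Lowering `hot_threshold` enlarges the fast tier, which mirrors the point above that AI and ML workloads tend to need a proportionally larger high-performing tier.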

This means deploying SSDs across a significant tier of the AI/ML storage environment. Only an SSD can deliver the throughput and low latency needed to support the rapid movement of massive amounts of data fed into AI and ML software at the training stage. As the process moves to inferencing, throughput and latency grow even more important, especially if another workflow depends on the response time of the AI/ML system. If people and other systems are waiting for a sluggish AI or ML system to complete its work, everyone suffers.
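A back-of-the-envelope calculation shows why sustained throughput matters when training repeatedly streams the same data. The figures below are illustrative assumptions, not measurements of any product: a hypothetical 10 TB dataset read at two hypothetical sustained rates.

```python
def seconds_to_stream(dataset_bytes: int, throughput_bytes_per_s: int) -> float:
    """Time for one full sequential pass over the dataset at a sustained rate."""
    if throughput_bytes_per_s <= 0:
        raise ValueError("throughput must be positive")
    return dataset_bytes / throughput_bytes_per_s


TB = 10**12
GB = 10**9
MB = 10**6

dataset = 10 * TB  # hypothetical training set size
slow = seconds_to_stream(dataset, 500 * MB)  # hypothetical slower tier
fast = seconds_to_stream(dataset, 5 * GB)    # hypothetical faster tier

print(f"{slow / 3600:.1f} h vs {fast / 3600:.1f} h per pass")
```

Because training usually makes many passes over the data, a tenfold difference per pass compounds across the whole run. Real workloads also involve random reads and compute stalls, which this sketch ignores.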

How Phison can help

Phison’s customizable SSD solutions deliver the kind of superior performance and flexibility needed for success with AI and ML workloads. Given that AI/ML storage tends to be more read- than write-intensive, Phison stands out as the only provider of a 2.5” 15.36 TB 7mm SATA SSD that is optimized for read-intensive applications at value price points.

As realized in the Phison ESR1710 series, it offers the highest rack storage densities and low power consumption—both essential ingredients of economical but high-performing storage needed by AI and ML. The unique dimensions of Phison’s 2.5” SATA SSD, which has the world’s highest capacity for an SSD of this size, make it possible to store up to 13 PB of data for AI and ML applications in a single 48U rack. This kind of density translates into favorable storage economics for AI and ML.
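The density figure can be sanity-checked with simple arithmetic. The sketch below assumes decimal units (1 PB = 1,000 TB) and raw capacity with no allowance for redundancy or spares; the article does not state how the 13 PB figure was derived, so the drive count is an inference, not a published specification.

```python
import math


def drives_needed(target_tb: float, drive_tb: float) -> int:
    """Smallest whole number of drives whose raw capacity meets the target."""
    return math.ceil(target_tb / drive_tb)


def raw_capacity_pb(drive_count: int, drive_tb: float) -> float:
    """Raw capacity in PB for a given number of drives (1 PB = 1,000 TB)."""
    return drive_count * drive_tb / 1000


DRIVE_TB = 15.36    # per-drive capacity stated in the article
TARGET_TB = 13_000  # 13 PB, the per-rack figure stated in the article

print(drives_needed(TARGET_TB, DRIVE_TB))
print(round(raw_capacity_pb(846, DRIVE_TB), 2))
```

Under these assumptions, the 13 PB figure corresponds to roughly 850 drives of 15.36 TB each in the 48U rack.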

For AI/ML applications that require the fastest PCIe Gen4x4 read and write speeds along with the industry’s lowest power consumption, Phison is now shipping the X1 SSD series in a U.3 form factor, which is backward compatible with U.2 slots and offers capacities of up to 15.36 TB.