A new approach to data storage delivers the high performance and low latency today’s organizations need.

As enterprises seek new ways to manage the flood of data they now generate, they’re realizing that they need a new approach to data storage. Traditional direct-attached storage (DAS) is no longer efficient because a server’s storage capacity is limited by the number of PCIe slots it has. And while today’s software-defined storage (SDS) is a step in the right direction, because it decouples storage from the underlying hardware, it still relies on the server’s CPU to manage storage access, replication, data encryption and so on, in addition to running applications. That extra load on the CPU can lead to higher latency and poorer overall performance, which in effect again restricts how much storage the server can handle.

As a result, a growing number of organizations are embracing disaggregated storage infrastructure, which aims to deliver the large storage capacities they need without adding complexity or extra hardware.

What is data storage disaggregation?

Storage disaggregation is a storage approach in which storage resources are separated, or decoupled, from compute resources. The infrastructure creates a shared pool of storage that can be accessed by an organization’s applications or other workloads over a network fabric.

Disaggregated storage is a form of composable infrastructure: storage is abstracted from the underlying hardware, and software manages the resulting pool. Scaling up or down is fast and efficient because each application or workload gets the resources it needs regardless of where those resources are located. Because compute resources are managed independently of storage resources, storage can be scaled on the fly without also adding compute that isn’t needed.
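A rough sizing sketch shows why independent scaling matters. All figures below (8 drive slots per server, 24 drives per storage enclosure, 15.36 TB drives, 1,000 TB of data, a workload needing 4 servers’ worth of compute) are hypothetical, and the two functions are illustrative helpers, not any vendor’s sizing tool.

```python
import math


def das_servers_needed(target_tb: float, compute_nodes: int,
                       slots_per_server: int, drive_tb: float) -> int:
    """DAS: capacity lives inside servers, so storage demand can force extra servers."""
    servers_for_storage = math.ceil(target_tb / (slots_per_server * drive_tb))
    # Buy whichever is larger: servers needed for compute, or servers
    # needed just to provide enough drive slots.
    return max(compute_nodes, servers_for_storage)


def disaggregated_needed(target_tb: float, compute_nodes: int,
                         drives_per_enclosure: int, drive_tb: float) -> tuple[int, int]:
    """Disaggregated: compute nodes and pooled storage enclosures are sized separately."""
    enclosures = math.ceil(target_tb / (drives_per_enclosure * drive_tb))
    return compute_nodes, enclosures


# Hypothetical workload: 4 servers' worth of compute, 1,000 TB of data.
print(das_servers_needed(1000, 4, slots_per_server=8, drive_tb=15.36))         # 9
print(disaggregated_needed(1000, 4, drives_per_enclosure=24, drive_tb=15.36))  # (4, 3)
```

Under these assumptions, five of the nine DAS servers exist mainly to hold drives, while the pooled design grows by adding storage enclosures instead of CPUs.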

How disaggregated storage works

The pool of storage resources in a disaggregated storage infrastructure is provisioned to applications and workloads across an organization’s IT ecosystem. A network fabric connects the storage devices, much as in a storage area network (SAN), but the difference here is that disaggregated storage pools can connect all sources and types of data storage, whether they’re HDDs or SSDs, local devices on-premises, devices at the edge in remote locations or even storage in the cloud.
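The pooling idea can be illustrated with a toy model. `Device`, `StoragePool` and the “most free space” placement policy below are hypothetical and not part of any product; a real disaggregated system performs this placement in its management software and exposes the resulting volumes to compute nodes over the fabric.

```python
from dataclasses import dataclass, field


@dataclass
class Device:
    name: str
    media: str         # "ssd" or "hdd"
    location: str      # e.g. "on-prem", "edge", "cloud"
    capacity_gb: int
    used_gb: int = 0

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - self.used_gb


@dataclass
class StoragePool:
    devices: list[Device] = field(default_factory=list)
    allocations: dict[str, tuple[str, int]] = field(default_factory=dict)

    def add_device(self, device: Device) -> None:
        # Scaling storage means adding a device to the pool; no compute node changes.
        self.devices.append(device)

    def provision(self, workload: str, size_gb: int, media: str) -> str:
        """Carve capacity for a workload from any device of the requested media type."""
        candidates = [d for d in self.devices
                      if d.media == media and d.free_gb >= size_gb]
        if not candidates:
            raise RuntimeError(f"no {media} device has {size_gb} GB free for {workload}")
        # Illustrative policy: choose the device with the most free space.
        target = max(candidates, key=lambda d: d.free_gb)
        target.used_gb += size_gb
        self.allocations[workload] = (target.name, size_gb)
        return target.name


pool = StoragePool([
    Device("ssd-a", "ssd", "on-prem", 3840),
    Device("ssd-b", "ssd", "edge", 7680),
    Device("hdd-a", "hdd", "cloud", 20000),
])
print(pool.provision("analytics", 5000, "ssd"))  # ssd-b
print(pool.provision("archive", 12000, "hdd"))   # hdd-a
print(pool.provision("database", 3000, "ssd"))   # ssd-a
```

Workloads never see which physical device or location backs their capacity; when the pool runs short, `add_device` grows it without touching any compute node.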

The high-speed network fabric is a critical component of disaggregated storage. It gives the system’s compute nodes access to low-latency, high-performance data storage and frees the CPU from storage management and provisioning duties. Some organizations run traditional Ethernet-based storage protocols such as iSCSI over the fabric, but a growing number are turning to NVMe over Fabrics, or NVMe-oF.

NVMe-oF

NVMe-oF was created to extend the capabilities of NVMe storage over various network fabrics, such as RDMA (including InfiniBand, RoCE and iWARP), TCP over Ethernet and Fibre Channel, delivering faster data transfer, higher performance and enhanced security. Before this technology emerged, organizations relied on storage protocols such as iSCSI (Internet Small Computer Systems Interface), SAS (Serial Attached SCSI) and Fibre Channel Protocol (FCP). All of these were developed when hard disk drives and physical tape drives ruled the data center, so they limit the performance of faster flash-based drives such as SSDs.

NVMe is a protocol developed specifically to connect SSDs over PCIe, so extending that capability across a variety of network fabrics gives organizations high performance and high-speed data transfer wherever their storage sits, and unlocks the full performance of SSDs. Using NVMe-oF in a disaggregated storage system decouples SSDs from any single server’s CPU and keeps the data they store accessible to compute nodes anywhere on the low-latency fabric.
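Much of NVMe’s advantage with SSDs comes from its queuing model: the specification allows up to roughly 64K I/O queues, each up to roughly 64K entries deep, whereas SATA’s AHCI interface offers a single 32-command queue. Drivers commonly give each CPU core its own submission/completion queue pair so cores do not contend for one shared queue. The Python below is a simplified sketch of that idea, not driver code: the class and function names are hypothetical, the “controller” is a plain loop, and doorbell writes and interrupts are omitted. It does keep one real NVMe detail, a circular submission queue that counts as full when advancing the tail would make it equal the head, so a queue of N slots holds at most N - 1 commands.

```python
from collections import deque


class SubmissionQueue:
    """Circular buffer indexed by head/tail; one slot stays empty to tell full from empty."""

    def __init__(self, size: int):
        self.slots = [None] * size
        self.head = 0  # next entry the controller will fetch
        self.tail = 0  # next free slot the host will fill

    def is_full(self) -> bool:
        return (self.tail + 1) % len(self.slots) == self.head

    def submit(self, command: str) -> None:
        if self.is_full():
            raise BufferError("submission queue full")
        self.slots[self.tail] = command
        self.tail = (self.tail + 1) % len(self.slots)
        # A real host would now write the new tail to this queue's doorbell register.

    def fetch(self):
        if self.head == self.tail:  # queue is empty
            return None
        command = self.slots[self.head]
        self.head = (self.head + 1) % len(self.slots)
        return command


class QueuePair:
    def __init__(self, size: int):
        self.sq = SubmissionQueue(size)
        self.cq: deque = deque()  # completion queue, simplified to a deque


def make_queue_pairs(num_cores: int, size: int) -> list[QueuePair]:
    # One queue pair per core, so cores never share (or lock) a queue.
    return [QueuePair(size) for _ in range(num_cores)]


def controller_poll(pairs: list[QueuePair]) -> int:
    """Toy controller: drain every SQ and post a completion to the paired CQ."""
    completed = 0
    for qp in pairs:
        while (command := qp.sq.fetch()) is not None:
            qp.cq.append(("success", command))
            completed += 1
    return completed


pairs = make_queue_pairs(num_cores=4, size=8)
for core, qp in enumerate(pairs):
    for lba in range(7):  # an 8-slot ring holds at most 7 commands
        qp.sq.submit(f"read core={core} lba={lba}")
print(controller_poll(pairs))  # 28
```

Because every core fills its own ring, adding cores adds parallel command streams rather than contention on a single queue, which is the kind of parallelism flash media can exploit.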

The benefits of NVMe-oF include fast remote access to storage; efficient data transfer, which is especially critical for applications that require ultra-low latency; full support for NVMe SSDs that offer the performance modern workloads demand; easier scalability and flexibility; and support for advanced storage features, such as namespace sharing, multipathing and end-to-end data path protection.

Compute Express Link (CXL)

CXL is quickly becoming an industry-standard interconnect for modern enterprise systems. It is cache-coherent, which means it keeps memory data consistent across CPUs, accelerators and other attached devices, including storage devices such as SSDs, so every component sees the same up-to-date values. With CXL, the system can share memory with higher performance and lower latency than conventional PCIe I/O transfers allow. CXL runs its own protocols (CXL.io, CXL.cache and CXL.mem) over the PCIe physical layer, so it reuses PCIe’s electrical interface and bandwidth while adding the coherent, low-latency memory access that plain PCIe lacks.
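What “cache-coherent” means can be shown with a deliberately tiny model: two agents (say, a CPU and an accelerator) caching the same line under a textbook MSI-style invalidation scheme. This is a generic illustration of coherence, not CXL’s protocol, which defines its own, considerably more involved rules; the class and method names are hypothetical.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"  # only valid copy, newer than memory
    SHARED = "S"    # clean, read-only copy; others may hold one too
    INVALID = "I"   # no usable copy


class Agent:
    def __init__(self, name: str):
        self.name = name
        self.state = State.INVALID
        self.value = None


class CoherentLine:
    """One cache line that several agents may cache, kept coherent by invalidation."""

    def __init__(self, agents: list[Agent], memory_value: int = 0):
        self.agents = agents
        self.memory = memory_value

    def _write_back_dirty(self, requester: Agent) -> None:
        # If another agent holds a modified copy, flush it to memory first.
        for other in self.agents:
            if other is not requester and other.state is State.MODIFIED:
                self.memory = other.value
                other.state = State.SHARED

    def read(self, agent: Agent) -> int:
        if agent.state is State.INVALID:
            self._write_back_dirty(agent)
            agent.value = self.memory
            agent.state = State.SHARED
        return agent.value

    def write(self, agent: Agent, value: int) -> None:
        self._write_back_dirty(agent)
        # Invalidate every other copy so no agent can read stale data.
        for other in self.agents:
            if other is not agent:
                other.state = State.INVALID
                other.value = None
        agent.value = value
        agent.state = State.MODIFIED


cpu, accel = Agent("cpu"), Agent("accelerator")
line = CoherentLine([cpu, accel], memory_value=0)
print(line.read(cpu), line.read(accel))  # 0 0
line.write(accel, 42)                    # the CPU's copy is invalidated
print(cpu.state.value)                   # I
print(line.read(cpu))                    # 42: the miss pulls the accelerator's update
```

The point of the exercise is the last line: without coherence, the CPU would keep returning its stale cached 0 after the accelerator wrote 42.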

While it isn’t a direct component of disaggregated data storage, CXL is enhancing today’s disaggregated storage infrastructure thanks to these performance benefits. CXL-enabled NVMe SSDs in disaggregated storage can narrow the performance gap with a system’s DRAM while providing far greater storage capacity.

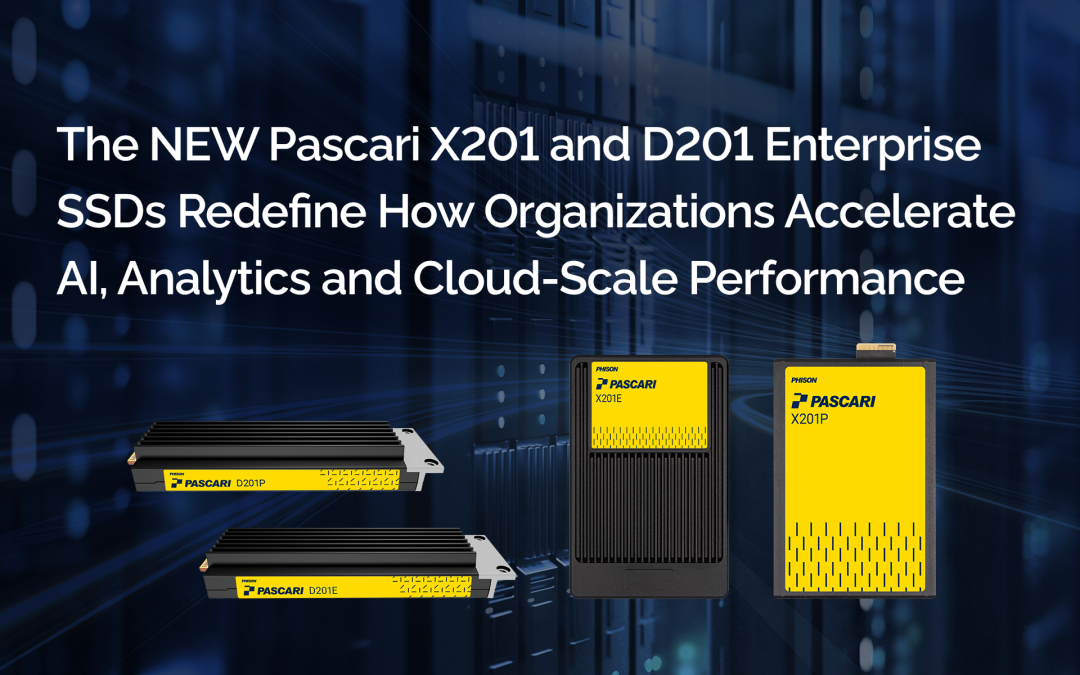

Choose Phison for SSDs with NVMe performance

As a global leader in NAND controllers and storage systems, Phison has extensive experience designing SSDs for today’s demanding workloads and applications, and the company is committed to ongoing research to continually improve its products and solutions.

“Phison has focused industry-leading R&D efforts on developing in-house, chip-to-chip communication technologies since the introduction of the PCIe 3.0 protocol, with PCIe 4.0 and PCIe 5.0 solutions now in mass production, and PCIe 6.0 solutions now in the design phase,” said Michael Wu, President & General Manager, Phison US, in a 2023 press release. Phison has also recently gained recognition for its retimer solution, which complies with the CXL 2.0 standard.

Disaggregated data storage can help organizations more efficiently manage large volumes of data and use it to extract valuable insights. With data storage solutions from Phison, they can get the scalability, performance, efficiency and flexibility they need to stay competitive.

Frequently Asked Questions (FAQ)

What problem does storage disaggregation solve in modern data centers?

Traditional DAS ties storage capacity to physical server slots, limiting scalability and increasing latency when CPUs handle both compute and storage tasks. Storage disaggregation removes this limitation by separating storage from compute resources, allowing shared, scalable pools of SSDs accessible across a high-speed network fabric.

How is disaggregated storage different from software-defined storage (SDS)?

While SDS abstracts storage through software, it still relies on each server’s CPU for management tasks. Disaggregated storage offloads those duties to a network fabric, reducing CPU load, improving latency, and allowing resources to be dynamically allocated across multiple compute nodes.

What role does NVMe-oF play in storage disaggregation?

NVMe-oF extends NVMe’s low-latency, high-throughput protocol beyond local PCIe to network fabrics such as RDMA (including RoCE), TCP, or Fibre Channel. It connects remote NVMe SSDs to compute nodes with near-local performance, enabling flexible, high-speed access to pooled storage.

Why are legacy protocols like iSCSI and SAS less efficient today?

iSCSI and SAS were designed in the era of mechanical storage media, creating bottlenecks when used with SSDs. NVMe-oF eliminates these inefficiencies, allowing flash-based devices to achieve their full parallelism and bandwidth potential through direct, streamlined communication paths.

How does Compute Express Link (CXL) enhance disaggregated infrastructure?

CXL introduces cache coherence between CPUs, accelerators, and attached devices. When combined with NVMe SSDs, CXL can bring large memory pools closer to DRAM-class performance, minimizing latency in AI and analytics workloads that demand rapid data access.

What advantages do Phison SSDs bring to disaggregated environments?

Phison’s enterprise-grade SSDs are built around in-house controller technology that optimizes latency, endurance, and data integrity. These drives support PCIe 4.0 and PCIe 5.0 today, with PCIe 6.0 in design, ensuring readiness for composable architectures that require high throughput and predictable QoS.

How does Phison’s R&D on PCIe and CXL standards benefit OEMs?

Phison’s retimer complies with the CXL 2.0 standard, and its PCIe 6.0 solutions are in the design phase. Together with Phison’s controller designs, this enables OEM partners to deploy high-bandwidth, low-power systems that scale efficiently across disaggregated topologies without performance loss over extended signal paths.

Why choose Phison as a co-design partner for NVMe-oF deployments?

Phison collaborates directly with OEMs and hyperscale customers to tune firmware and controller logic for specific workloads, whether AI inference, HPC caching, or multi-tenant storage nodes, ensuring optimized performance across composable infrastructures.

How does Phison ensure reliability in large scale disaggregated systems?

Through advanced error correction algorithms, end-to-end data path protection, and namespace sharing support, Phison SSDs deliver consistent uptime and predictable latency even under the high-IOPS, mixed-workload conditions common in modern disaggregated architectures.

What industries benefit most from Phison-powered disaggregated storage?

Sectors with data-intensive operations, such as AI and ML research, hyperscale cloud, autonomous systems, and financial analytics, benefit from Phison’s controller-level innovations that provide scalable, low-latency, high-bandwidth storage designed for composable data centers.