AI is everywhere in business today, from automated decision-making robots on the assembly line to chatbots working customer service to on-the-fly peak pricing optimization in the energy sector. The news is rife with stories of how AI is impacting the way we work and interact with each other across every industry.

What’s getting a lot of buzz lately, however, is the more specific niche of generative AI, the technology underlying hot content-, image- and video-creation apps such as ChatGPT, DALL-E and OpenAI’s Sora. Also sometimes referred to as GenAI, generative AI has the potential to transform business more noticeably than the other AI solutions supporting businesses today—but only if organizations can provide the right data storage that delivers the capacity and performance the technology demands.

What is generative AI?

Simply put, generative AI is AI that generates wholly new content, whether that’s chatbot responses, product designs, advertising collateral, images or video footage. While it’s the AI that’s responsible for ethically ambiguous deepfakes, it can also be used to automate written responses or content such as resumes or online profiles; recommend innovative drug compounds; optimize electronic chip designs; write music or novels in specific styles; make voice dubbing more accurate in movies; create new art in any requested style; create architectural designs based on certain parameters; and much more.

Generative AI differs from other types of AI in that it depends on components such as the following:

Large language models (LLMs)

An LLM is a program that processes, summarizes and generates text. It’s trained on massive data sets, and the model itself can contain billions or potentially trillions of parameters, which is how it learns to represent text and context. LLMs have been key in enabling generative AI models to vastly improve in content creation. They help enable text-to-image and text-to-video generation, for instance, as well as the ability to caption images automatically.

Generative adversarial networks (GANs)

A GAN is composed of two neural networks that continually compete against each other. One network is the generator and the other is the discriminator. The generator is trained to create synthetic outputs that look like real examples, and the discriminator is tasked with telling real examples apart from generated ones. Through this process, which doesn’t require human-labeled data, the generator gets better at creating realistic content and the discriminator gets better at detecting it. Over time, the generated content becomes ever more realistic until the discriminator can no longer reliably tell the difference.
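To make the tug-of-war between the two networks concrete, here is a minimal sketch of the loss each side tries to minimize. It assumes the standard binary cross-entropy formulation of a GAN. The function names and the example scores are illustrative only, and no real networks are trained here.

```python
import math

def bce(prediction: float, target: float) -> float:
    """Binary cross-entropy for a single probability."""
    eps = 1e-12  # keeps log() away from zero
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))

def discriminator_loss(d_real: float, d_fake: float) -> float:
    # The discriminator wants to score real samples near 1
    # and generated samples near 0.
    return bce(d_real, 1.0) + bce(d_fake, 0.0)

def generator_loss(d_fake: float) -> float:
    # The generator wants the discriminator to score its
    # samples as real, i.e. near 1.
    return bce(d_fake, 1.0)

# Hypothetical discriminator scores early in training (fakes are easy to spot)
early_g = generator_loss(d_fake=0.1)
# Hypothetical scores late in training (the discriminator is guessing)
late_g = generator_loss(d_fake=0.5)
late_d = discriminator_loss(d_real=0.5, d_fake=0.5)
```

When the discriminator can only guess (a score of 0.5 for everything), the generator's loss is much lower than it was early on, which matches the point at which generated content has become hard to tell from the real thing.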

Transformers

This type of neural network architecture makes it possible to train very large models on massive volumes of data that doesn’t need to be labeled beforehand. That means an AI algorithm can chew through millions or even billions of text-based pages to give the model deeper “knowledge.” Transformers make it possible for models to identify and understand connections between words in a piece of content, such as the context that links individual sentences in a book. Models can also learn connections and context between specific proteins or chemical substances, lines of code and even DNA markers.
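The mechanism transformers use to find connections between words is called self-attention. The sketch below is a simplified illustration in plain Python: it assumes made-up two-dimensional word vectors and skips the learned query, key and value projections that a real transformer would apply, so treat it as a toy rather than a working model.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Scaled dot-product self-attention with identity projections."""
    d = len(vectors[0])
    outputs, weights = [], []
    for query in vectors:
        # Score this word against every word in the sequence
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
            for key in vectors
        ]
        w = softmax(scores)
        weights.append(w)
        # Each output is a weighted blend of all word vectors
        outputs.append(
            [sum(w_j * v[i] for w_j, v in zip(w, vectors)) for i in range(d)]
        )
    return outputs, weights

# Hypothetical toy embeddings, chosen so "bank" sits close to "river"
words = ["bank", "river", "loan"]
embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
outputs, weights = self_attention(embeddings)
```

In this toy setup, the attention weights for "bank" lean toward "river" rather than "loan", which is the kind of contextual link a trained model picks up from data at a much larger scale.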

Unlike traditional AI, which typically follows a predetermined set of steps to parse data and come up with results, generative AI usually allows a user to enter a prompt or query to start generating content. For instance, you could ask the application to create a short essay on the events that led up to World War II. Ask for original art that depicts daily life in eighteenth-century Australia. Or describe a scene in text and see it come to life in realistic video. Generative AI is designed for new content creation rather than completing tasks based on rules and preset outcomes.

How GenAI works—and why data storage matters

AI of any type typically involves enormous amounts of data, and generative AI probably requires even more. There are two stages in AI projects, including GenAI, and in each one researchers must manage and handle massive datasets.

Training stage

To train generative AI algorithms, researchers feed them lots and lots of data. This includes online web content, books, videos, images, reports, social media content and much more. The AI platform must be able to store that data. The AI algorithm analyzes this collection of content and identifies connections, context, patterns and so on. It creates mathematical models around those patterns and connections and continually refines those models as it receives more data. LLMs delve into their datasets over and over again to increase understanding and awareness of patterns and meanings.

The workloads created by AI training are immense and complex. They require ultra-high performance for reading from and writing to storage at the same time. The hardware and software that support those workloads must be able to keep up.
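A quick back-of-the-envelope calculation shows why training puts so much pressure on storage. The sketch below uses hypothetical numbers (a 500 TB data set, three passes, a 72-hour run and a 1,000 GB checkpoint). None of these figures come from a real project, and the helper names are invented for illustration.

```python
def required_read_gb_per_s(dataset_tb: float, passes: int, training_hours: float) -> float:
    """Average read throughput needed to stream the data set `passes` times."""
    total_gb = dataset_tb * 1000 * passes  # decimal units: 1 TB = 1000 GB
    return total_gb / (training_hours * 3600)

def required_write_gb_per_s(checkpoint_gb: float, write_window_s: float) -> float:
    """Write throughput needed to save one checkpoint within the window."""
    return checkpoint_gb / write_window_s

# Hypothetical training run
read_rate = required_read_gb_per_s(dataset_tb=500, passes=3, training_hours=72)
# Hypothetical checkpoint that must land on storage within one minute
write_rate = required_write_gb_per_s(checkpoint_gb=1000, write_window_s=60)

print(f"sustained read:  {read_rate:.1f} GB/s")
print(f"checkpoint write: {write_rate:.1f} GB/s")
```

Under these assumptions the storage system would need to sustain roughly 5.8 GB/s of reads while also absorbing checkpoint writes at nearly 17 GB/s in bursts. Real requirements depend heavily on the model, the data pipeline and how often checkpoints are taken.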

Inferencing stage

After a GenAI model is trained, it is ready for users to make queries and request content output. These tasks require high-performance read capabilities because the AI system must be able to load billions or trillions of parameters from storage quickly and apply them to each query to create the best response in just seconds. The nature of this stage also means most systems must have parallel data paths to reach the speeds and performance users expect.
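The idea of parallel data paths can be sketched in a few lines: instead of reading one large file from start to finish, the data is split into shards that are read concurrently. The example below is only an illustration, assuming small dummy shard files written to a temporary directory. The helper names are hypothetical, and a real deployment would rely on parallel file systems or multiple network paths rather than a thread pool on one machine.

```python
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

def read_shard(path: str) -> bytes:
    """Read one shard from storage."""
    with open(path, "rb") as f:
        return f.read()

def load_shards_parallel(paths, max_workers: int = 4) -> bytes:
    """Read all shards concurrently and reassemble them in order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map() returns results in the same order as `paths`
        return b"".join(pool.map(read_shard, paths))

def write_demo_shards(directory: str, count: int = 4, size: int = 1024):
    """Create small dummy shards standing in for model weight files."""
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"shard_{i}.bin")
        with open(path, "wb") as f:
            f.write(bytes([i]) * size)
        paths.append(path)
    return paths

with tempfile.TemporaryDirectory() as tmp:
    shard_paths = write_demo_shards(tmp)
    blob = load_shards_parallel(shard_paths)

print(f"loaded {len(blob)} bytes from {len(shard_paths)} shards")
```

On storage that can serve many requests at once, such as flash, issuing reads in parallel like this is one way to keep the underlying devices busy instead of waiting on a single sequential stream.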

Data storage factors to consider for generative AI

To support the oversized storage needs of generative AI, organizations are having to rethink their data storage and management practices. Many organizations are opting for a hybrid approach to data storage and capitalizing on the benefits of having both cloud and on-premises storage to enable AI projects.

AI-friendly data storage typically includes the following:

- High capacity – petabytes are a starting point

- Ultra-high performance – enabled by low latency and high IOPS and throughput

- Parallel processing – ideally connected to large compute arrays and multiple independent networks

To achieve the performance that generative AI requires, many organizations are turning to flash-based SSDs for their on-premises arrays. It’s possible to use hard disk drives for AI data storage but flash is considered optimal. In fact, one expert from NAND Research, an industry analyst firm, recently stated, “[Organizations] that are serious about large language models, they’re buying high-end flash storage.”

With SSDs, organizations can provide the high IOPS they need in a smaller footprint with less energy consumption. SSDs are also a good choice for high-performance object storage, which is typically the storage type of choice for AI projects.

Even hyperscalers, such as AWS, Azure and Google Cloud Platform, are turning to flash-based systems with SSDs to provide the performance customers want.

Phison offers innovative data storage for generative AI

As organizations increasingly realize the value of generative AI and how it can help their business, Phison has continued to invest in R&D and innovation to meet their evolving data storage needs.

Phison knows AI and the type of storage it requires to be a success. To that end, the company has launched IMAGIN+, a proprietary customization service that includes AI computational models and solutions for AI services.

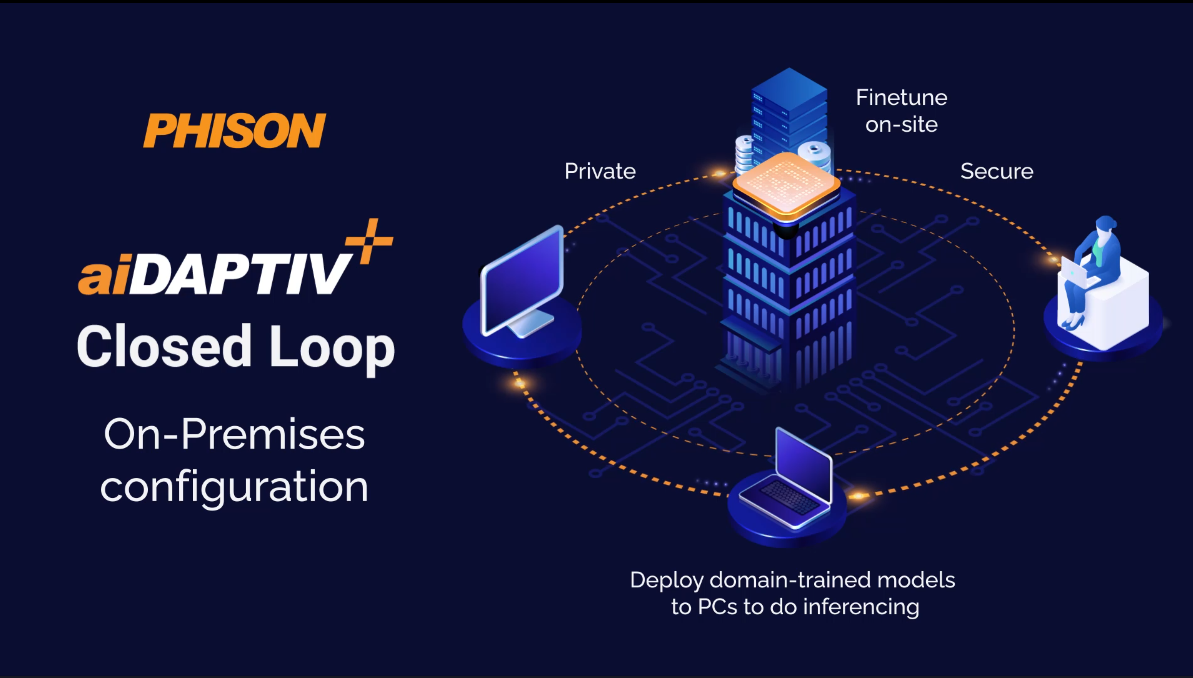

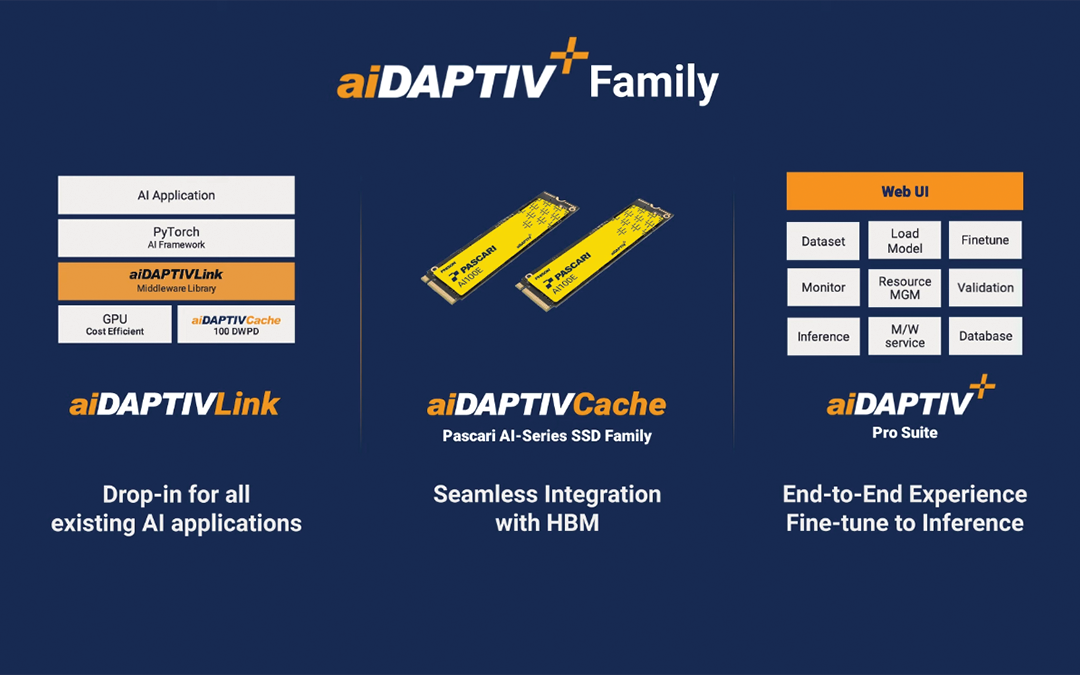

The company also introduced aiDAPTIV+, an expansion of IMAGIN+. The new services take advantage of Phison’s “innovative integration of SSDs into the AI computing framework and expands NAND storage solutions in the AI application market.”

By integrating SSDs into the AI computing framework, Phison helps improve operational performance of AI hardware solutions and brings down the cost of AI projects by reducing reliance on GPUs and DRAM. Phison SSDs can act as offload support and allow organizations to train their generative AI models with less need for GPUs and DRAM.
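The general idea of offloading can be illustrated with a small two-tier store: a limited "fast" tier holds the most recently used items, and anything that doesn't fit is spilled to disk and reloaded on demand. This is a conceptual sketch only and is not how aiDAPTIV+ is implemented. The `TieredStore` class, its capacity and the layer names are all hypothetical, with an in-memory dictionary standing in for GPU memory and DRAM and a temporary directory standing in for the SSD.

```python
import os
import pickle
import tempfile
from collections import OrderedDict

class TieredStore:
    """Keeps `fast_capacity` items in memory and spills the rest to disk."""

    def __init__(self, fast_capacity: int, spill_dir: str):
        self.fast_capacity = fast_capacity
        self.spill_dir = spill_dir
        self.fast = OrderedDict()  # stands in for GPU memory / DRAM
        self.spilled = set()       # keys currently held on the "SSD" tier

    def _path(self, key: str) -> str:
        return os.path.join(self.spill_dir, f"{key}.pkl")

    def put(self, key: str, value) -> None:
        self.fast[key] = value
        self.fast.move_to_end(key)  # mark as most recently used
        self.spilled.discard(key)
        # Evict least recently used items once the fast tier is full
        while len(self.fast) > self.fast_capacity:
            old_key, old_value = self.fast.popitem(last=False)
            with open(self._path(old_key), "wb") as f:
                pickle.dump(old_value, f)
            self.spilled.add(old_key)

    def get(self, key: str):
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        if key not in self.spilled:
            raise KeyError(key)
        with open(self._path(key), "rb") as f:
            value = pickle.load(f)
        self.put(key, value)  # promote back into the fast tier
        return value

with tempfile.TemporaryDirectory() as tmp:
    store = TieredStore(fast_capacity=2, spill_dir=tmp)
    for i, name in enumerate(["layer0", "layer1", "layer2"]):
        store.put(name, [float(i)] * 4)  # hypothetical layer weights
    spilled_after_put = set(store.spilled)
    restored = store.get("layer0")
    spilled_after_get = set(store.spilled)
```

The trade-off is the usual one for tiered memory: the fast tier can be much smaller than the full working set, at the cost of extra reads and writes to the slower tier, which is why the speed of that tier matters.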

With aiDAPTIV+ solutions from Phison, businesses of all sizes can benefit from generative AI while maintaining control over their proprietary data. Organizations no longer need to spend millions of dollars on specialized hardware and GPUs to train AI on their data.

Generative AI has the potential to completely transform business operations, product design, customer service, marketing efforts and much more across every industry. With flash-based storage and SSDs from Phison, you can prepare your organization to embrace that transformation.
