Find out why high-performance storage is critical to delivering real-time AI insights, and how Phison makes it easy.

AI is transforming how organizations interact with their data. But the next big leap isn’t just in model development. It’s in how those models access, retrieve and synthesize information on demand. That’s where retrieval-augmented generation (RAG) comes in.

RAG combines traditional generative AI with the ability to retrieve relevant context from external data sources in real time. This hybrid approach enables more accurate, up-to-date and context-aware responses, making it ideal for applications like enterprise search, conversational AI, customer support and scientific research. However, for RAG to deliver real value at scale, it needs more than just smart models. It needs exceptional storage performance to support fast, seamless access to large, unstructured datasets.

What makes RAG so demanding?

Unlike conventional generative models, which operate solely on pre-trained parameters, RAG injects external knowledge into the inference process. When a user query comes in, the system first retrieves relevant documents from a knowledge base, then feeds both the query and the retrieved data into a large language model (LLM) to generate a response.
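The retrieve-then-generate flow described above can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions: a bag-of-words cosine score stands in for a real embedding model, the three-document knowledge base is invented, and the final LLM call is left out because it depends on whichever model you deploy. The names `KNOWLEDGE_BASE`, `retrieve` and `build_prompt` are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical toy knowledge base; a real RAG system would index wikis, logs, papers, etc.
KNOWLEDGE_BASE = [
    "Refunds are processed within five business days of approval.",
    "The VPN client must be updated before connecting to the lab network.",
    "GPU nodes in the research cluster are reserved through the scheduler.",
]

def tokenize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (a stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, k: int = 1) -> list[str]:
    """Step 1: score every document against the query and keep the top k."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: cosine(q, tokenize(doc)), reverse=True)
    return ranked[:k]

def build_prompt(query: str, k: int = 1) -> str:
    """Step 2 input: the query plus the retrieved context, ready to send to an LLM."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"

# The LLM call itself (sending build_prompt(...) to a model) is deployment-specific and omitted.
print(build_prompt("How long do refunds take?"))
```

In production, step 1 runs against an index of embeddings held on storage, which is where much of the retrieval latency comes from.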

This two-step process means the model must interact with massive, often heterogeneous datasets, ranging from internal wikis and support logs to academic journals and transaction records. These datasets need to be stored in a way that supports:

- Low-latency retrieval of relevant content

- High-throughput processing for inference pipelines

- Rapid updates and indexing for continuously evolving data sources

- Scalability to accommodate growing AI knowledge bases

Traditional storage simply can’t keep up. Hard drives, limited by mechanical seek times, introduce bottlenecks on the small random reads that retrieval generates. Legacy SSDs may offer decent read speeds but can fall short on endurance or throughput when scaling across GPU-powered AI clusters. RAG workloads need something faster, smarter and more resilient.

The role of SSDs in accelerating AI and RAG workflows

Storage is the invisible engine behind modern AI. And when it comes to RAG, great storage performance is a must.

High-performance NVMe SSDs deliver the ultra-low latency and high input/output operations per second (IOPS) that RAG pipelines depend on. They enable:

- Fast vector searches across massive embedding collections using tools such as the FAISS library or the Vespa search engine

- Rapid pre-processing and post-processing stages in the AI workflow

- Seamless parallelism, where multiple GPUs can be saturated with data without I/O contention

- Minimal inference latency, critical for customer-facing or real-time AI applications

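Conceptually, a vector search finds the stored embeddings most similar to a query embedding. The sketch below is an exact brute-force scan, roughly what a flat inner-product index does; libraries such as FAISS also provide approximate indexes (for example IVF or HNSW) that avoid scanning every vector. The function names and the three-dimensional toy embeddings are assumptions for illustration only.

```python
import heapq
import math

def normalize(v: list[float]) -> list[float]:
    """Scale a vector to unit length so inner product equals cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def top_k(query: list[float], vectors: list[list[float]], k: int) -> list[tuple[int, float]]:
    """Exact (brute-force) cosine search: return (index, similarity) pairs, best first.

    Every stored vector is read for every query, so throughput is bounded by
    how fast the embeddings can be streamed from memory or storage.
    """
    q = normalize(query)
    scored = ((i, sum(a * b for a, b in zip(q, normalize(v)))) for i, v in enumerate(vectors))
    return heapq.nlargest(k, scored, key=lambda pair: pair[1])

# Hypothetical toy embeddings; real ones typically have hundreds of dimensions.
embeddings = [
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.7, 0.7, 0.1],
]
print(top_k([1.0, 0.0, 0.0], embeddings, k=2))
```

Because an exact scan touches every stored vector per query, collections too large to keep in memory lean heavily on storage bandwidth and latency; approximate indexes reduce how much data each query reads.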

Next-gen SSDs push this further with PCIe Gen5 interfaces, which provide about 4 GB/s per lane in each direction, or roughly 16 GB/s for a standard x4 NVMe SSD, helping keep GPUs fed in high-throughput AI systems.
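Interface bandwidth scales with lane count, so it helps to work the numbers out from the PCIe Gen5 signaling rate of 32 GT/s per lane with 128b/130b encoding. The sketch below computes theoretical one-direction figures (1 GB = 10^9 bytes) before protocol overhead, so real drives deliver somewhat less. It gives roughly 3.9 GB/s for x1, 15.8 GB/s for the x4 links NVMe SSDs typically use, and about 63 GB/s only for a full x16 link. The function name is hypothetical.

```python
# PCIe Gen5: 32 GT/s per lane, 128b/130b line encoding.
GEN5_GT_PER_S = 32e9
ENCODING_EFFICIENCY = 128 / 130

def link_bandwidth_gb_s(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before packet/protocol overhead."""
    bits_per_s = GEN5_GT_PER_S * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> GB

for lanes in (1, 4, 16):
    print(f"x{lanes}: {link_bandwidth_gb_s(lanes):.2f} GB/s")
```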

Why data-centric architecture matters in RAG

AI processing has shifted from being compute-centric to data-centric. In RAG pipelines, performance is often limited not by the model itself but by the speed and intelligence of the data flow. That includes:

- Ingestion – How quickly new data can be indexed and made retrievable

- Access – How fast relevant context can be fetched during inference

- Lifecycle management – How efficiently datasets are moved between hot, warm and cold storage tiers

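A lifecycle policy can be as simple as placing data according to how recently it was accessed. The sketch below assumes hypothetical 7-day and 90-day thresholds and example tier mappings; production tiering systems typically also weigh access frequency, capacity and cost.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical thresholds; real policies depend on workload, capacity and cost targets.
HOT_WINDOW = timedelta(days=7)
WARM_WINDOW = timedelta(days=90)

@dataclass
class Dataset:
    name: str
    last_accessed: datetime

def assign_tier(ds: Dataset, now: datetime) -> str:
    """Place a dataset on a tier by how recently it was read."""
    age = now - ds.last_accessed
    if age <= HOT_WINDOW:
        return "hot"   # e.g. high-performance NVMe SSD close to the GPUs
    if age <= WARM_WINDOW:
        return "warm"  # e.g. higher-capacity, lower-cost SSD
    return "cold"      # e.g. HDD or object storage

now = datetime(2025, 1, 31)
datasets = [
    Dataset("support-logs-jan", now - timedelta(days=1)),
    Dataset("wiki-snapshot-q3", now - timedelta(days=45)),
    Dataset("transactions-2019", now - timedelta(days=1500)),
]
for ds in datasets:
    print(ds.name, assign_tier(ds, now))
```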

This is where next-generation SSDs, especially those engineered for AI use cases, become indispensable. They offer not only raw speed but also advanced endurance, intelligent caching and the ability to handle mixed workloads with consistency.

How Phison helps you build AI at speed and scale

In the race to deliver smarter, faster and more trustworthy AI, it’s not just about model weights and training data. It’s about how well your infrastructure can feed those models the right information at the right time. RAG is leading a shift toward more context-aware AI, but to make it viable at scale, you need a storage layer that moves just as fast as your thinking machines.

Phison’s portfolio of next-gen SSDs is designed specifically for the evolving needs of AI and RAG workflows. Engineered for low latency, high endurance and AI-optimized throughput, these SSDs empower organizations to extract maximum performance from their AI infrastructure, whether it’s on-premises, in a hybrid cloud or at the edge.

Phison also delivers end-to-end support for AI storage architecture, helping enterprises:

- Design high-performance storage stacks tailored to LLM and RAG pipelines

- Implement intelligent tiering to balance speed and cost

- Enable data locality strategies to reduce latency and network dependency

- Future-proof infrastructure with PCIe Gen5-ready devices and advanced firmware tuning

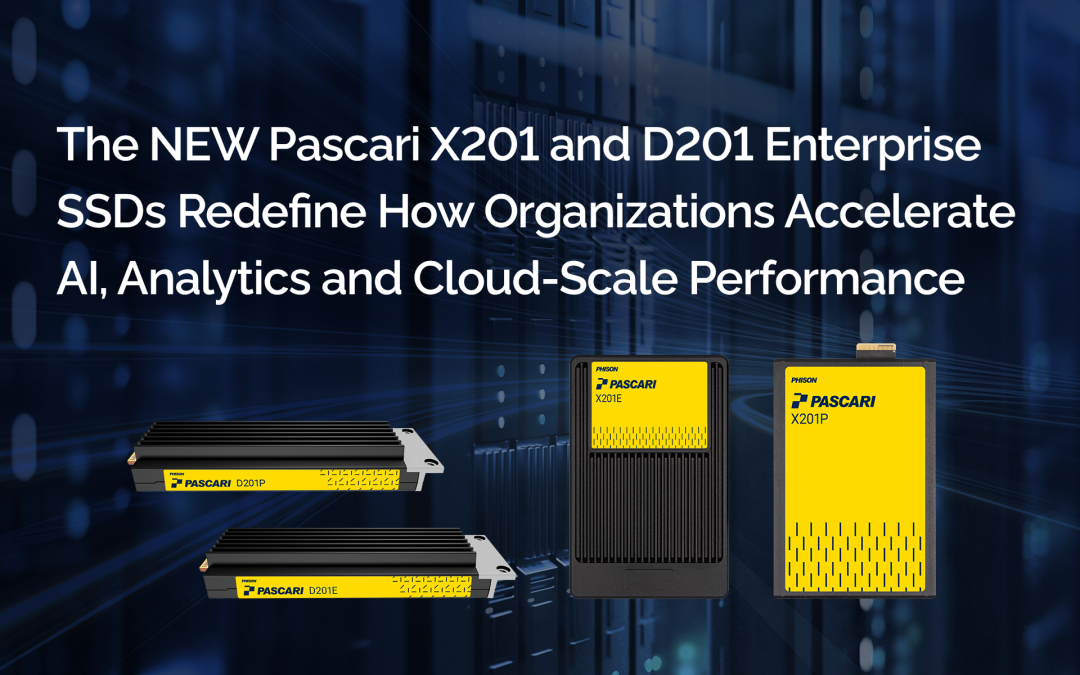

Organizations using Phison’s Pascari enterprise SSDs for AI-optimized persistent storage and aiDAPTIV+ SSDs for cache memory gain faster time to insight, smoother model deployments and more agile responses to changing data needs. With the speed, resilience and intelligence required to power today’s most advanced AI architectures, Phison isn’t just keeping up with the pace of innovation; it’s helping to set it.

Frequently Asked Questions (FAQ):

What was the focus of Phison’s participation at AI Infrastructure Tech Field Day?

Phison focused on practical challenges institutions face when deploying AI inference and model training on-premises. The sessions addressed GPU memory constraints, infrastructure cost barriers, and the complexity of running large language models locally. Phison introduced aiDAPTIV as a controller-level solution designed to simplify AI deployment while reducing dependency on high-cost GPU memory.

What is the TechStrong TV “director’s highlights” webinar?

TechStrong TV produced a curated highlights cut from Phison’s Tech Field Day sessions, presented as a Tech Field Day Insider webinar. This format distills the most relevant technical insights and includes expert panel commentary, making it easier for IT and research leaders to grasp the architectural implications without watching full-length sessions.

Who are the Phison speakers featured in the webinar?

The webinar highlights two Phison technical leaders:

- Brian Cox, Director of Solution and Product Marketing, who covers affordable on-premises LLM training and inference.

- Sebastien Jean, CTO, who explains GPU memory offload techniques for LLM fine-tuning and inference using aiDAPTIV.

Why is on-premises AI important for universities and research institutions?

On-premises AI enables institutions to maintain data sovereignty, meet compliance requirements, and protect sensitive research data. It also reduces long-term cloud costs and provides predictable performance for AI workloads used in research, teaching, and internal operations.

What are the main infrastructure challenges discussed in the webinar?

Key challenges include limited GPU memory capacity, escalating infrastructure costs, and the complexity of deploying and managing LLMs locally. These constraints often prevent institutions from scaling AI initiatives beyond pilot projects.
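A rough estimate shows why GPU memory runs out. The sketch uses widely cited rule-of-thumb byte counts: about 2 bytes per parameter for fp16 inference weights, and about 16 bytes per parameter for mixed-precision training with the Adam optimizer (fp16 weights and gradients plus fp32 master weights and two Adam moment buffers). Activations, KV cache and framework overhead are excluded, so real requirements are higher. The 8B and 70B model sizes and the function name are illustrative assumptions.

```python
# Rule-of-thumb bytes per parameter (weights and optimizer state only;
# activations, KV cache and framework overhead come on top).
BYTES_PER_PARAM = {
    "fp16_inference": 2,                  # fp16/bf16 weights
    "mixed_precision_adam_training": 16,  # fp16 weights + grads, fp32 master weights + Adam m and v
}

def memory_gb(num_params: int, mode: str) -> float:
    """Approximate memory footprint in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[mode] / 1e9

for params in (8_000_000_000, 70_000_000_000):
    for mode in BYTES_PER_PARAM:
        print(f"{params / 1e9:.0f}B params, {mode}: {memory_gb(params, mode):,.0f} GB")
```

Even fp16 inference for a 70B-parameter model (about 140 GB of weights) exceeds a single 80 GB GPU, and full fine-tuning (about 1,120 GB) needs far more, which is the gap that extending GPU memory with NVMe storage aims to close.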

How does Phison aiDAPTIV enable affordable on-prem AI training and inference?

Phison aiDAPTIV extends GPU memory using high-performance NVMe storage at the controller level. This allows large models to run on existing hardware without requiring additional GPUs or specialized coding, significantly lowering the cost barrier for local AI deployment.

What does “GPU memory offload” mean in practical terms?

GPU memory offload allows AI workloads to transparently use NVMe storage when GPU memory is saturated. For researchers and IT teams, this means larger models can be trained or fine-tuned without redesigning pipelines or rewriting code.
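The general idea behind offload can be illustrated with a toy two-tier store. This is not aiDAPTIV’s implementation, which the source describes as operating transparently at the controller and system level; it is a generic least-recently-used (LRU) spill-to-storage sketch in which `OffloadingStore`, its methods and the abstract size units are all hypothetical.

```python
from collections import OrderedDict

class OffloadingStore:
    """Toy two-tier store: a small 'GPU' tier backed by a large 'NVMe' tier.

    When the GPU tier is full, the least recently used block is spilled to NVMe;
    reading a spilled block promotes it back. Sizes are abstract units, not bytes.
    """

    def __init__(self, gpu_capacity: int):
        self.gpu_capacity = gpu_capacity
        self.gpu: OrderedDict = OrderedDict()  # name -> size, oldest first
        self.nvme: dict = {}                   # name -> size

    def _used(self) -> int:
        return sum(self.gpu.values())

    def _make_room(self, size: int) -> None:
        # Evict least recently used blocks until the new block fits.
        while self.gpu and self._used() + size > self.gpu_capacity:
            name, evicted_size = self.gpu.popitem(last=False)
            self.nvme[name] = evicted_size

    def put(self, name: str, size: int) -> None:
        if size > self.gpu_capacity:
            raise ValueError(f"block {name!r} is larger than GPU capacity")
        self.gpu.pop(name, None)  # replace an existing copy if present
        self._make_room(size)
        self.gpu[name] = size

    def get(self, name: str) -> str:
        """Return which tier served the read; NVMe hits are promoted back to the GPU tier."""
        if name in self.gpu:
            self.gpu.move_to_end(name)  # mark as most recently used
            return "gpu"
        size = self.nvme.pop(name)
        self.put(name, size)
        return "nvme"

store = OffloadingStore(gpu_capacity=10)
for layer in ("layer0", "layer1", "layer2"):
    store.put(layer, 4)          # the third block forces layer0 out to NVMe
print(store.get("layer0"))       # served from NVMe, then promoted
print(store.get("layer2"))       # still resident on the GPU tier
```

Real offload systems typically also try to overlap transfers with compute and prefetch data before it is needed, rather than relying on pure LRU eviction.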

Does aiDAPTIV require changes to existing AI frameworks or code?

No. aiDAPTIV operates at the system and storage layer, enabling AI workloads to scale without modifying model code or AI frameworks. This is especially valuable for academic teams using established research workflows.

How does this solution help control AI infrastructure budgets?

By reducing reliance on expensive high-capacity GPUs and enabling better utilization of existing hardware, aiDAPTIV lowers capital expenditure while extending system lifespan. This makes advanced AI workloads more accessible to budget-constrained institutions.

Why should higher education stakeholders watch this webinar?

The webinar provides a real-world blueprint for deploying private, on-premises AI at scale. It offers actionable insights into lowering costs, improving resource efficiency, and enabling secure AI research and experimentation without cloud lock-in.